Here’s the new trailer for “In Your Dreams,” finished at Water Tower Color. Very excited for this one to be released in November!

The Wizard of Oz at Sphere

Absolutely honored to have collaborated with the Water Tower Color team on "The Wizard of Oz" for the MSG Sphere. This project represents a paradigm shift in immersive entertainment.

A massive congratulations to the entire post-production team, the forward-thinking visionaries at MSG Sphere, and the brilliant minds at Google DeepMind who powered this spectacle. This was a monumental collaboration, and the result speaks for itself. We didn't just raise the bar; we created a new one.

Congratulations again to all involved. The future of immersive entertainment is bright, and I can't wait to see what we all create next.

Click through to YouTube to use your phone’s accelerometer to move the view around.

Here is the original proof of concept I made over two and a half years ago. It was truly amazing to see how far it had come at the premiere last night. Make sure to check it out next time you are in Vegas!

Post Perspective - Color Pipeline: Virtual Roundtable

Here is a Q&A I was recently included in. Check out the full article here at postperspective.com

Warner Bros. Post Creative Services Colorist John Daro

Warner Bros. Post Production Creative Services is a post house on the Warner Bros. lot in Burbank. “We specialize in feature films and high-end episodic projects, with picture and sound finishing under one roof. We also have editorial space and visual effects offices just one building over, so we truly are a one-stop shop for post.”

What does your setup look like tools-wise?

I have been a devotee of FilmLight’s Baselight for the past five years. It is the beating heart of my DI theater, where I project images onto a 4K Christie projector and monitor them on two Sony X300s. For that “at-home” consumer experience, I also have a Sony A95K.

Although I spend 90% of my time on Baselight, there are a few other post-software necessities for my craft. I call my machine the “Swiss army box,” a Supermicro chassis with four Nvidia A6000s. I use this machine to run Resolve, Mistika, Photoshop, and Nuke. It also makes a fine dev box for my custom Python tools.

I always say, “It’s not the sword; it’s the samurai.” Use the right tool for the right job, but if you don’t have the right tool, then use what you’ve got.

Do you work in the cloud? If so, can you describe that workflow and the benefits?

Not really. For security reasons, our workstations are air-gapped and disconnected from the outside world. All media flows through our IO department. However, one cloud tool I do use is Frame.io, especially for the exchange of notes back and forth. I really like how everything is integrated into the timeline. It’s a super-efficient way to collaborate. In addition to those media uploads, the IO team also archives finished projects and raw scans to the cloud.

I do think cloud workflows are gaining steam, and I definitely have my eye on the space. I can envision a future where we send a calibrated Sony X3110 to a client and then use Baselight in the cloud to send JPEG XS straight to the display for remote approvals. It’s a pretty slick workflow, and it also gets us away from needing the big iron to live on-prem.

Working this way takes geography out of the equation too. I would love to work from anywhere on the planet. Bring on the Tiki drinks with the little umbrellas somewhere in the tropics with a laptop and a Mini Panel. All joking aside, it does open the talent pool to the entire world. You will be able to get the best artists regardless of their location. That’s an exciting prospect, and I can’t wait to see what the future holds for this new way of looking at post.

Do you often create LUTs for a project? How does that help?

I mostly work with curves and functions to do my transforms, but when on-set or editorial needs a preview of what the look will be in the room, I do bake LUTs out. They are especially critical for visual effects reviews and dailies creation.
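Baking a LUT just means sampling a curve-and-function transform on a regular grid and writing the results out. Here is a minimal Python sketch that writes a 3D LUT in the common .cube text format. `example_look` is a hypothetical stand-in for a real show transform, and the 33-point default size is an assumption (17, 33, and 65 are typical).

```python
import numpy as np


def bake_cube_lut(transform, size=33, title="show_look"):
    """Sample an RGB -> RGB function on a size^3 grid and return .cube text.

    In the .cube layout, red varies fastest, then green, then blue.
    """
    lines = [f'TITLE "{title}"', f"LUT_3D_SIZE {size}"]
    steps = np.linspace(0.0, 1.0, size)
    for b in steps:
        for g in steps:
            for r in steps:
                out = transform(np.array([r, g, b]))
                lines.append(" ".join(f"{v:.6f}" for v in out))
    return "\n".join(lines) + "\n"


def example_look(rgb):
    # Hypothetical stand-in for a curve-based look: clip to 0-1, then a gentle gamma.
    return np.clip(rgb, 0.0, 1.0) ** 0.9


cube_text = bake_cube_lut(example_look, size=17)
```

Because the LUT only stores grid samples, everything between grid points is interpolated by whatever applies it. That is one reason to keep the curves themselves as the master and treat baked LUTs as previews.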

There’s a film project that I’m working on right now. We’re doing a scan-once workflow on that show to avoid handling the negative more than necessary. Once scanned, there is light CDL grading, and a show LUT is applied to the raw scans to make editorial media. The best looks are the ones that have been developed early and help to maintain consistency throughout the entire workflow. That way, you don’t get any surprises when you get into the final grade. Temp love is a thing… LUTs help you avoid loving the wrong thing.
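Light CDL grading like this is usually expressed as ASC CDL values: per-channel slope, offset, and power, plus one saturation value. Here is a minimal sketch of that math, following the ASC CDL v1.2 formulas as I understand them (clamping to 0-1 and Rec. 709 luma weights for saturation). Some implementations relax the clamps for log or scene-linear data, so treat the clamping as an assumption to check against your tools. The show LUT would be applied after this step.

```python
import numpy as np

REC709_LUMA = np.array([0.2126, 0.7152, 0.0722])


def apply_cdl(rgb, slope, offset, power, saturation=1.0):
    """Apply ASC CDL slope/offset/power, then saturation, to RGB values."""
    rgb = np.asarray(rgb, dtype=float)
    # SOP: out = clamp(in * slope + offset) ** power
    out = np.clip(rgb * np.asarray(slope) + np.asarray(offset), 0.0, 1.0)
    out = out ** np.asarray(power)
    # Saturation: scale each channel's distance from Rec. 709 luma.
    luma = (out * REC709_LUMA).sum(axis=-1, keepdims=True)
    return np.clip(luma + saturation * (out - luma), 0.0, 1.0)


graded = apply_cdl([0.2, 0.5, 0.8], slope=[1.05, 1.0, 0.95],
                   offset=[0.01, 0.0, -0.01], power=[1.0, 1.0, 1.1], saturation=0.9)
```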

Do you use AI as part of your daily job? In what way?

I do use a bit of AI in my daily tasks, but it’s the AI that I’ve written myself. Originally, I started trying to make an automated dust-buster for film restoration. I failed miserably at that, but I did learn how to train a neural net, and that led to my first helpful tool.

I used an open-source image library to train an AI up-rezer. Although this is commonplace now, back then, it was scratching an itch that hadn’t been scratched yet. To this day, I do think my up-rezer is truer to the image and less “AI”-feeling than what’s available off the shelf.

After the up-rezer, I wrote Match Grader in 2020, which essentially takes the look and vibe from one shot and applies it to another. I don’t use it for final grading, but it can be very useful in the look-dev process.
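Match Grader’s internals aren’t described here, so the following is not it. As a point of reference only, a classic published technique for the same "make shot A feel like shot B" idea is Reinhard-style statistical color transfer, which matches the per-channel mean and standard deviation of a source frame to those of a reference. The original method works in the lαβ opponent space; this sketch skips that conversion and works in whatever space the arrays are in, which is a simplifying assumption.

```python
import numpy as np


def transfer_stats(source, reference, eps=1e-6):
    """Shift and scale each channel of `source` to match `reference` statistics."""
    src = np.asarray(source, float).reshape(-1, 3)
    ref = np.asarray(reference, float).reshape(-1, 3)
    src_mean, src_std = src.mean(axis=0), src.std(axis=0)
    ref_mean, ref_std = ref.mean(axis=0), ref.std(axis=0)
    # Normalize the source distribution, then rescale it to the reference's.
    out = (src - src_mean) / (src_std + eps) * ref_std + ref_mean
    return out.reshape(np.shape(source))
```

Like the tool described above, this returns a finished image rather than a recipe, so it works best as a target to match by hand in the color corrector.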

Building on what I had learned coding Match Grader, I subsequently developed a process to use machine vision to create a depth channel. This turns your Power Windows from circles and squares into spheres and cubes. It is a very powerful tool for adding atmosphere to images. When these channels are available to me, one of my favorite moves is to desaturate the background while increasing the contrast in the foreground. This adds dimension to your image and helps to draw your eye to the characters where it was intended.
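The depth-channel move described above (desaturating the background while adding contrast in the foreground) boils down to a blend weighted by depth. Here is a minimal sketch, assuming a depth matte normalized so 0 is nearest and 1 is farthest, Rec. 709 luma weights, and contrast applied as a power curve around a mid-grey pivot. The parameter values are placeholders, not settings from any show.

```python
import numpy as np

REC709_LUMA = np.array([0.2126, 0.7152, 0.0722])


def depth_grade(rgb, depth, fg_contrast=1.2, bg_saturation=0.6, pivot=0.18):
    """Blend a desaturated background grade with a contrasty foreground grade."""
    rgb = np.clip(np.asarray(rgb, float), 0.0, None)
    far = np.clip(np.asarray(depth, float), 0.0, 1.0)[..., None]  # 1 = background
    luma = (rgb * REC709_LUMA).sum(axis=-1, keepdims=True)
    background = luma + bg_saturation * (rgb - luma)      # luma-preserving desaturation
    foreground = pivot * (rgb / pivot) ** fg_contrast     # contrast around mid grey
    return far * background + (1.0 - far) * foreground
```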

These channels can also aid in stereo compositing, but it’s been a minute since I have had a 3D job cross my desk that wasn’t for VR.

Machine vision segmentation with YOLO, 16 fps at 4K

Lately, I have been tinkering with an open-source library called YOLO (You Only Look Once). This software was originally developed for real-time object detection, the kind used in autonomous driving, but I found it useful for what we do in color. Basically, it’s a very fast image segmenter. It returns a track and a matte for what it identifies in the frame. It doesn’t get everything right all the time, but it is very good with people, thankfully. You wouldn’t use these mattes for compositing, but they are great for color, especially when used as a garbage matte to key into.
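Here is one way to turn a segmentation matte into a garbage matte for color, assuming you already have a per-frame binary mask from a segmenter such as YOLO (the model call itself is left out, since it depends on the package and weights you use). The sketch grows the mask, softens it with a separable box blur, and multiplies it into a key so the key only acts inside the person. `grow_px` and `soften_px` are hypothetical defaults.

```python
import numpy as np


def grow(mask, pixels):
    """Binary dilation by a square of radius `pixels`, using shifted maxima."""
    out = mask.astype(float)
    padded = np.pad(out, pixels)  # zero padding on all sides
    h, w = out.shape
    grown = np.zeros_like(out)
    for dy in range(2 * pixels + 1):
        for dx in range(2 * pixels + 1):
            grown = np.maximum(grown, padded[dy:dy + h, dx:dx + w])
    return grown


def box_blur(img, radius):
    """Separable box blur: rows first, then columns."""
    k = np.ones(2 * radius + 1) / (2 * radius + 1)
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, rows)


def garbage_matte(seg_mask, grow_px=8, soften_px=6):
    return np.clip(box_blur(grow(seg_mask, grow_px), soften_px), 0.0, 1.0)


def limit_key(key, seg_mask, **kwargs):
    """Restrict a color key to the (grown, softened) segmented region."""
    return key * garbage_matte(seg_mask, **kwargs)
```

Because the matte is generous and soft, small segmentation errors at the edges stay hidden inside the key, which is why these mattes can work for color even when they would not survive a composite.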

I have also recently refreshed my AI up-rezer. I built in some logic that is somewhat “intelligent” about the incoming source, so the process is not a one-size-fits-all operation.

SamurAI Image Restoration

It can now auto-detect interlacing and cadence and perform a general analysis of picture quality. This allows me to throttle the strength and end up with the perfect amount of enhancement on a case-by-case basis. The new tool is named SamurAI.
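SamurAI’s detection logic isn’t described here, so this is not it. For illustration, a common heuristic for spotting interlacing compares each line against the average of its neighbors (which come from the other field) and normalizes by the difference between lines of the same field. Combed frames score high; progressive frames score low. The threshold below is a hypothetical value you would tune on real material.

```python
import numpy as np


def combing_ratio(luma):
    """Inter-field vs. intra-field line difference for one frame (2D luma array)."""
    y = np.asarray(luma, float)
    # Each interior line vs. the average of the lines above and below (other field).
    inter_field = np.abs(y[1:-1] - 0.5 * (y[:-2] + y[2:])).mean()
    # Lines two apart belong to the same field.
    intra_field = np.abs(y[2:] - y[:-2]).mean()
    return inter_field / (intra_field + 1e-6)


def looks_interlaced(luma, threshold=1.0):  # hypothetical threshold
    return combing_ratio(luma) > threshold
```

Cadence detection can build on the same per-frame score: with 2:3 pulldown, two out of every five frames mix fields from different film frames, so the combed frames repeat in a five-frame pattern.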

If given an example from another show or work of art, what is the best way to emulate that?

It’s good to be inspired, but you never want to be derivative. Often, we take many examples that all have a common theme or feeling and amalgamate them into something new.

That said, sometimes there are projects that do need a literal match. Think film emulation for a period effect. People can approach it in two ways. The first is the simplest in concept, though the more involved in practice: get hold of some of the stock you are emulating. Next, you expose it with color and density patches, then develop and measure the strip. If you read enough points, you can start to interpolate curves from the data.

FilmLight can help with this, and back in my lab days, that is exactly whose software we used. Truelight was essential back in the early days of DI, when the “I” was truly the intermediate digital step between two analog worlds.
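For the first approach, once the strip has been measured, the readings become a lookup from exposure to density. Here is a minimal sketch using piecewise-linear interpolation for one channel. The numbers are placeholders, not measurements of a real stock, and a real build would repeat this for each channel.

```python
import numpy as np

# Placeholder step-wedge readings for ONE channel: relative log exposure of each
# patch vs. measured density. Illustrative numbers only, not data for a real stock.
LOG_EXPOSURE = np.array([-3.0, -2.5, -2.0, -1.5, -1.0, -0.5, 0.0, 0.5])
DENSITY = np.array([0.12, 0.15, 0.28, 0.55, 0.90, 1.25, 1.55, 1.75])


def density_at(log_e):
    """Piecewise-linear interpolation between measured patches (clamped at the ends)."""
    return np.interp(log_e, LOG_EXPOSURE, DENSITY)


def log_exposure_for(density):
    """Inverse lookup; valid because density rises monotonically with exposure."""
    return np.interp(density, DENSITY, LOG_EXPOSURE)
```

With more patches, a smooth monotone fit such as SciPy’s `PchipInterpolator` is a common alternative to straight lines between points.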

The second way I approach this task would be to use my Match Grader software. I can push the look of our references to some of the production footage. Match Grader is a bit of a black box in that it returns a completed graded image but not the recipe for getting there. This means the next step would be to bring it into the color corrector and match it using curves, keys, and scopes. The advantage of doing it this way instead of just matching it to the references is that you are working with the same picture, which makes it easier to align all the values perfectly.

Oh, or you can just use your eyeballs. 😉

Do your workflows include remote monitoring?

Not only do they include it, but there was a time in the not-too-distant past when that was the only option. We use all the top solutions for remote sessions, including Streambox, Sohonet ClearView, Colorfront, and T-VIPS. The choice really comes down to what the facility on the catching side has and the location of the client. At the moment, my preference is Streambox. It checks all the boxes, from 4K to HDR. For quick approvals, ClearView is great because all we need on the client side is a calibrated iPad Pro.

What film or show or spot resonates with you from a color perspective?

Going back to my formative years, I have always been drawn to the austere beauty of Gattaca. The film’s use of color is simply flawless. Cinematographer Sławomir Idziak is one of my favorites, and he has profoundly influenced my work. I love Gattaca’s early flashbacks, in particular. I have been gravitating in that direction ever since I saw the picture.

Gattaca

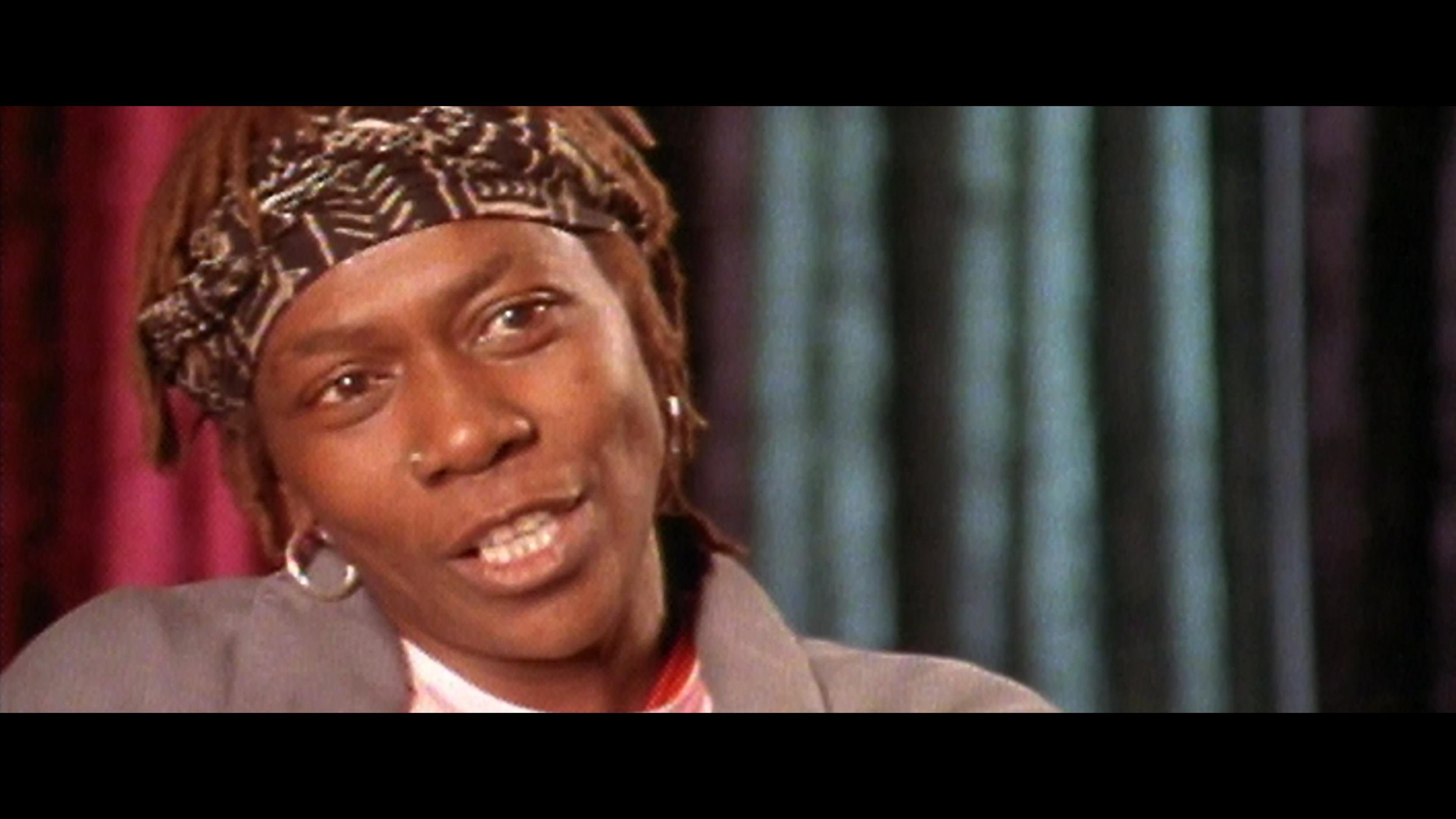

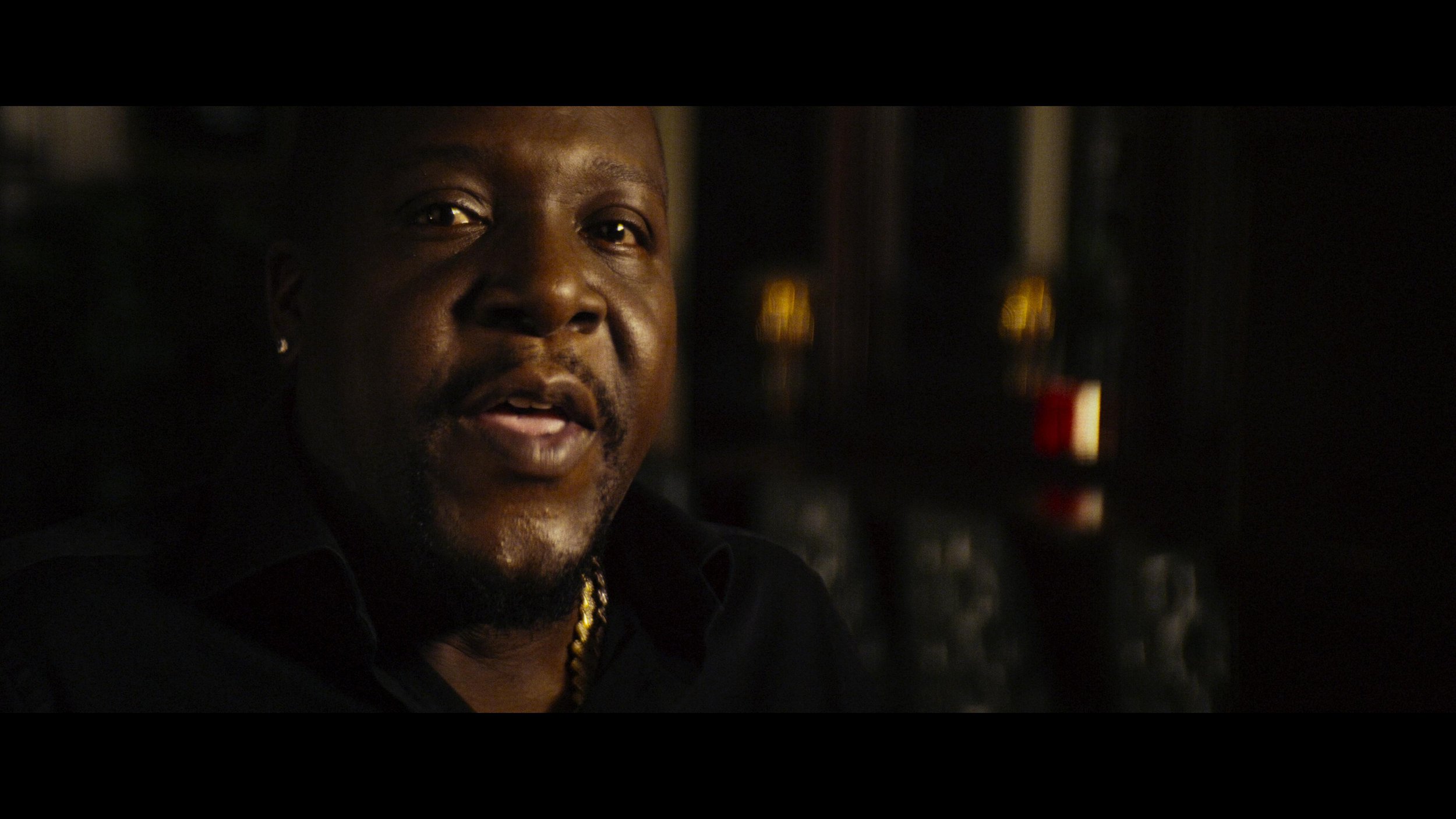

Magic Mike

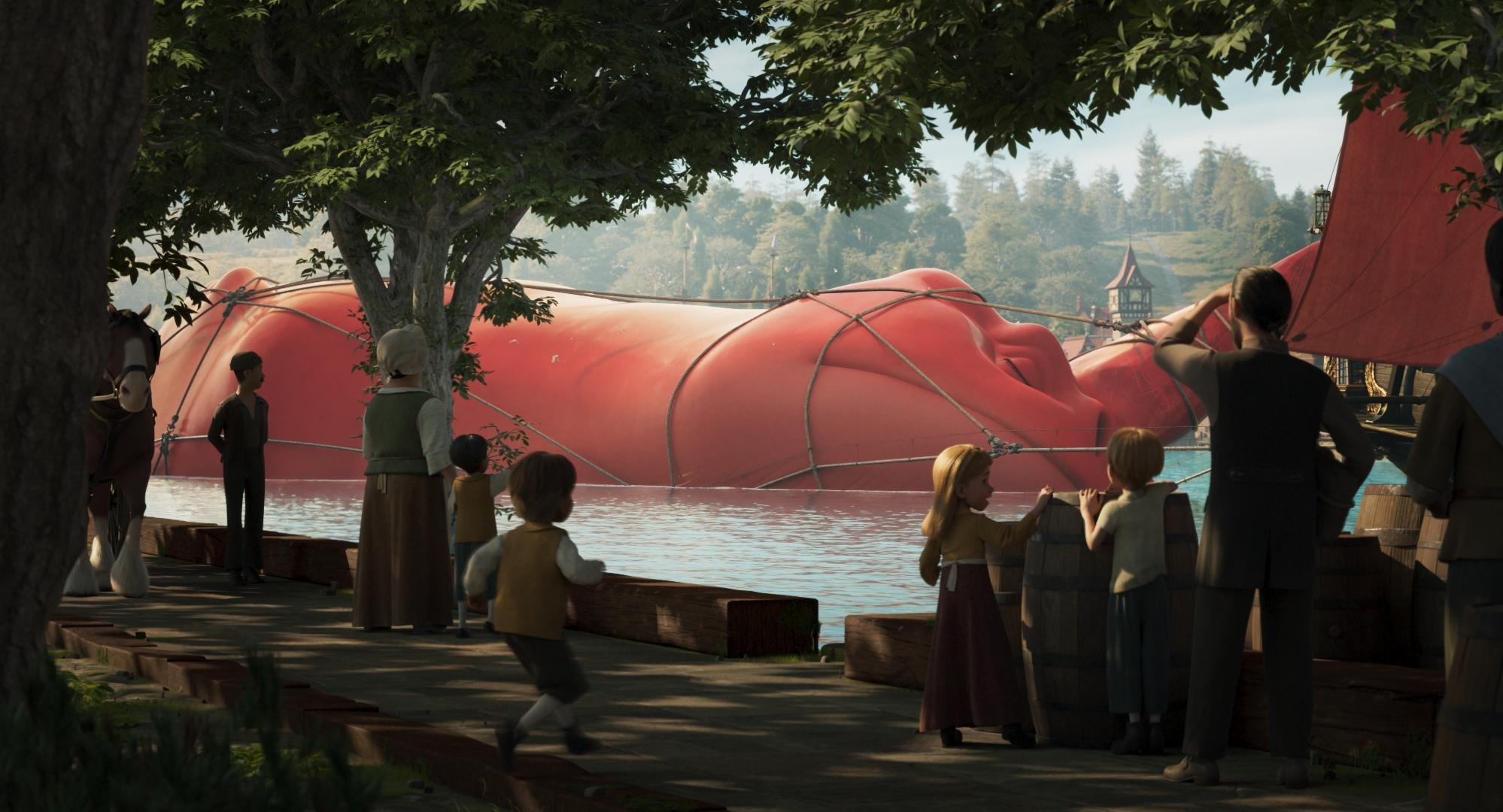

The Sea Beast

You can see a bit of Gattaca’s influence in my own work on Steven Soderbergh’s Magic Mike and even a little bit in the animated film The Sea Beast, directed by Chris Williams.


I am always looking for new ways to push the boundaries of visual storytelling, and there are a ton of other films that have inspired me, but perhaps that’s a conversation for another time. I am grateful for the opportunity to have worked on the projects I have, and I hope that my work will continue to evolve, inspire, and be inspired in the years to come.

Congratulations "Dear Mama" Emmy Nomination

“Dear Mama” Emmy nomination for Best Documentary or Nonfiction Series

Congratulations to Allen Hughes and the Dear Mama team on their well-deserved recognition. I am crossing my fingers for an Emmy win on the day!

https://www.hollywoodreporter.com/tv/tv-news/2023-emmys-nominations-nominees-list-1235533766/

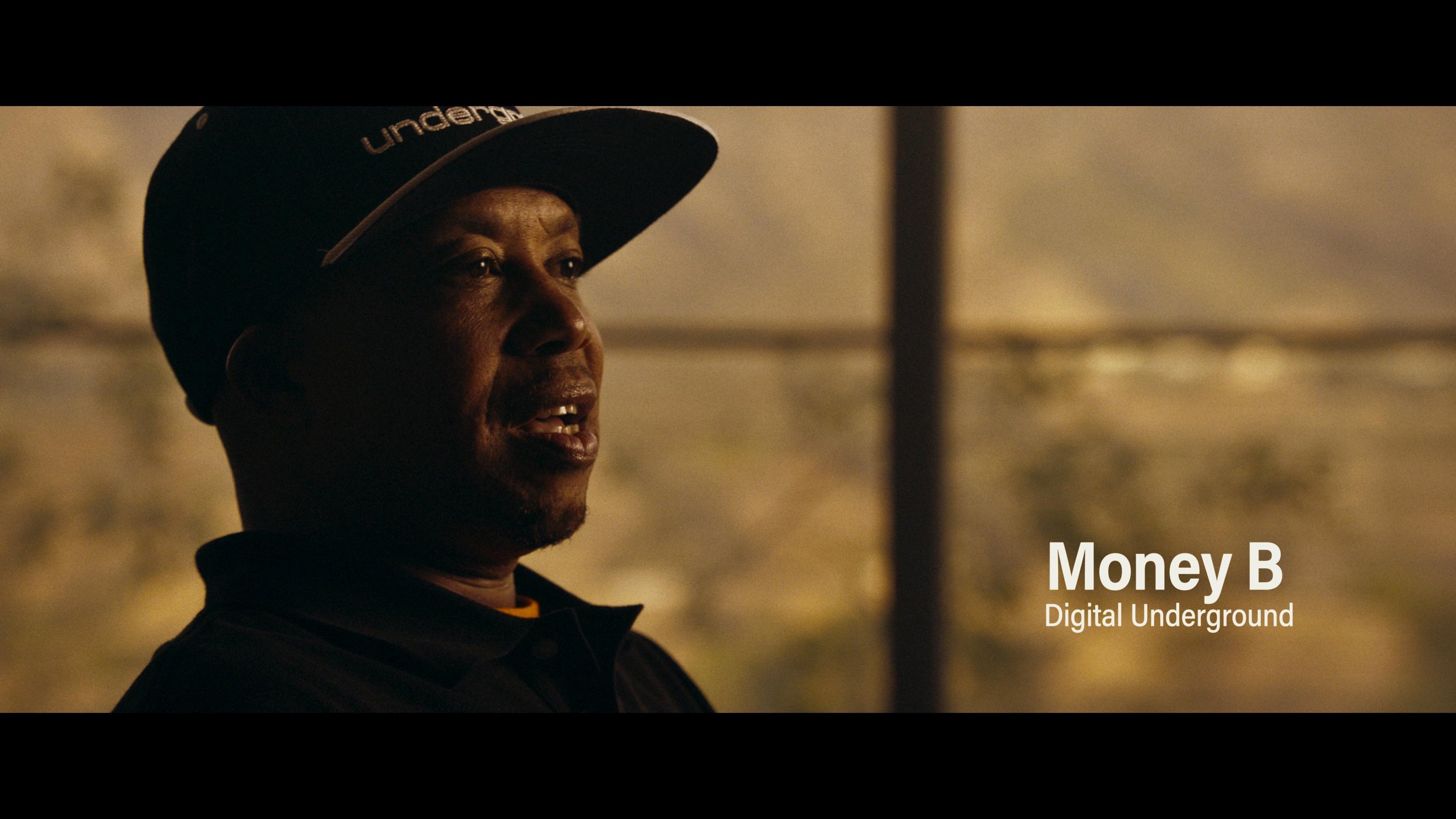

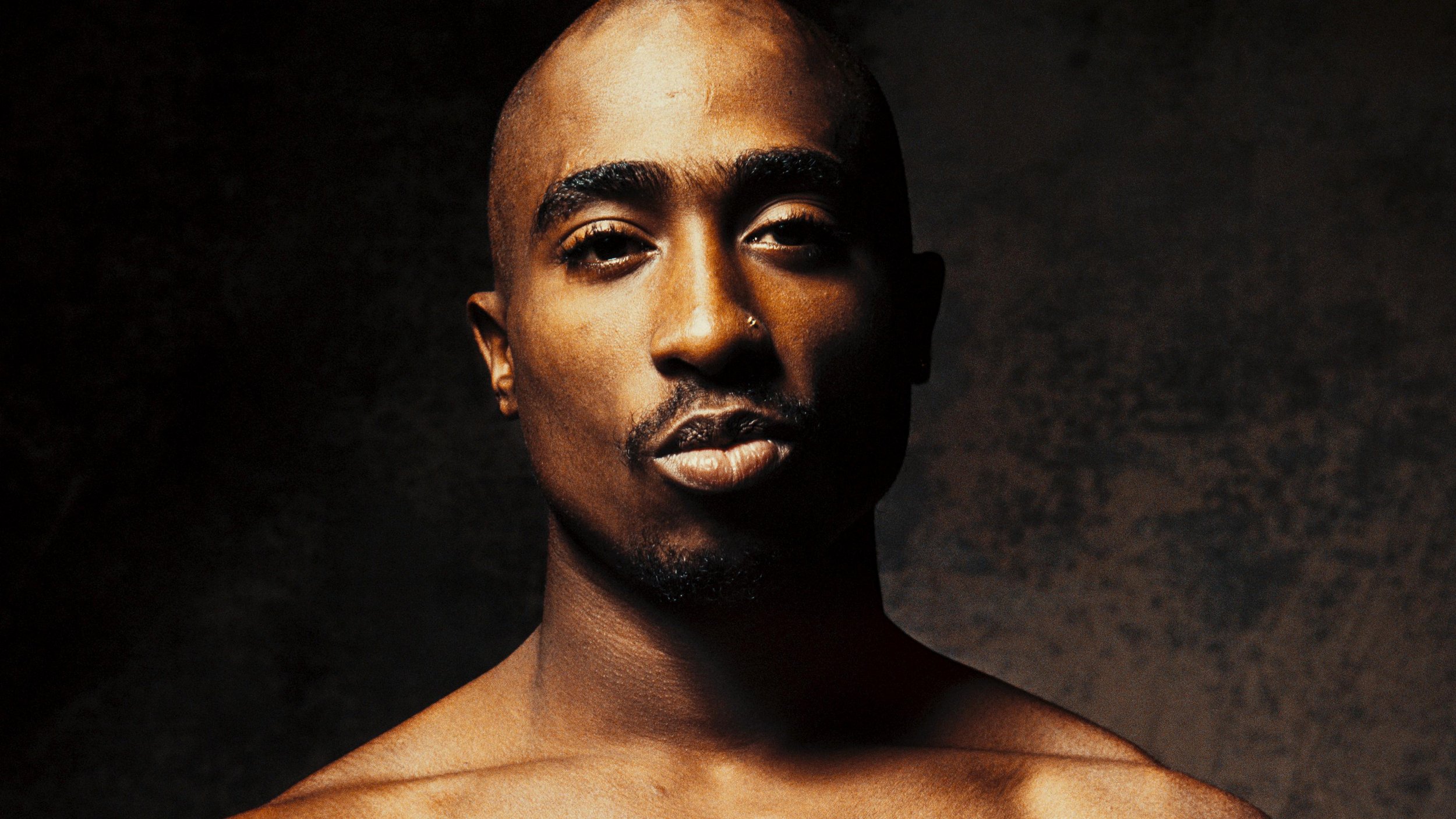

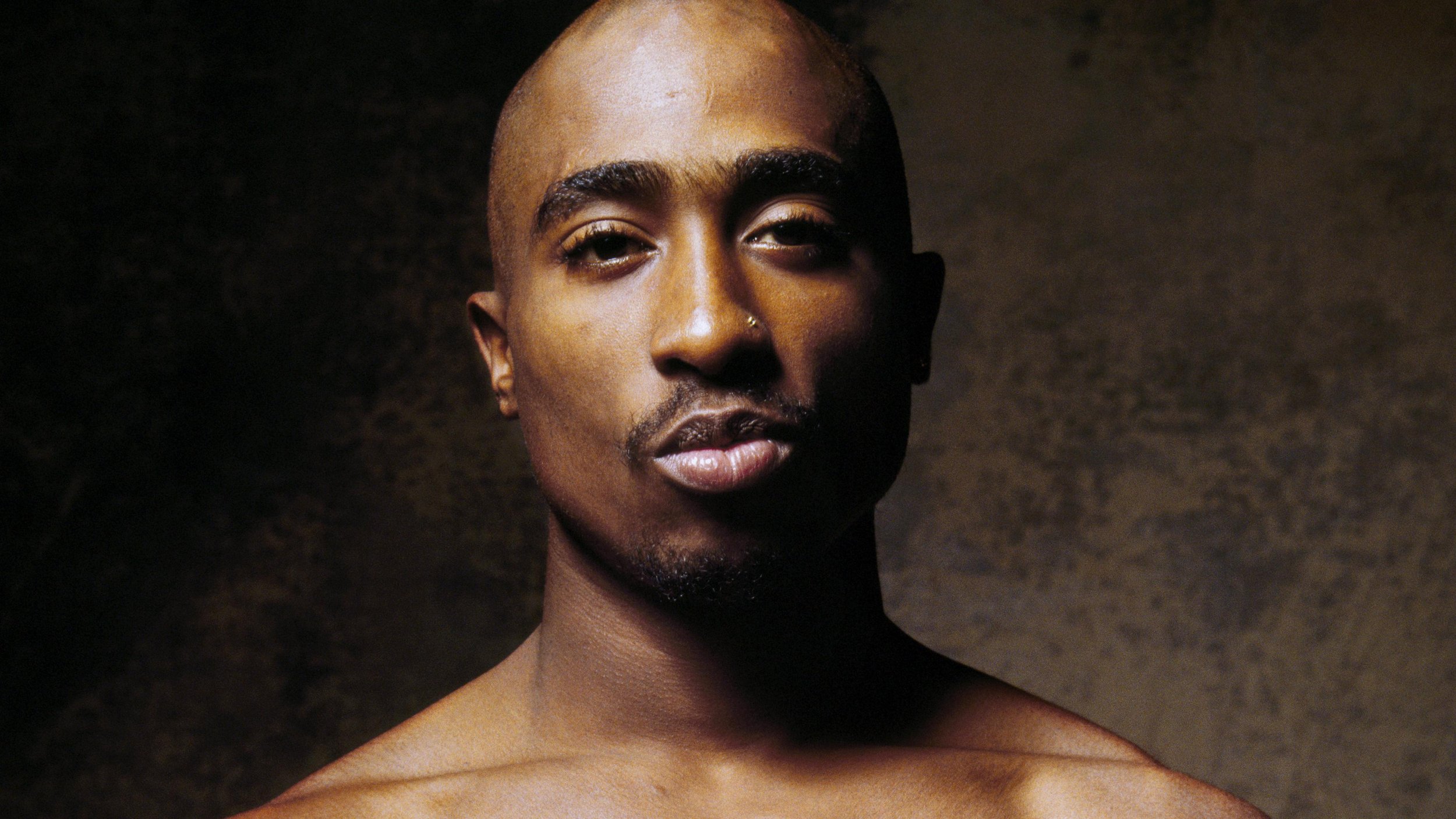

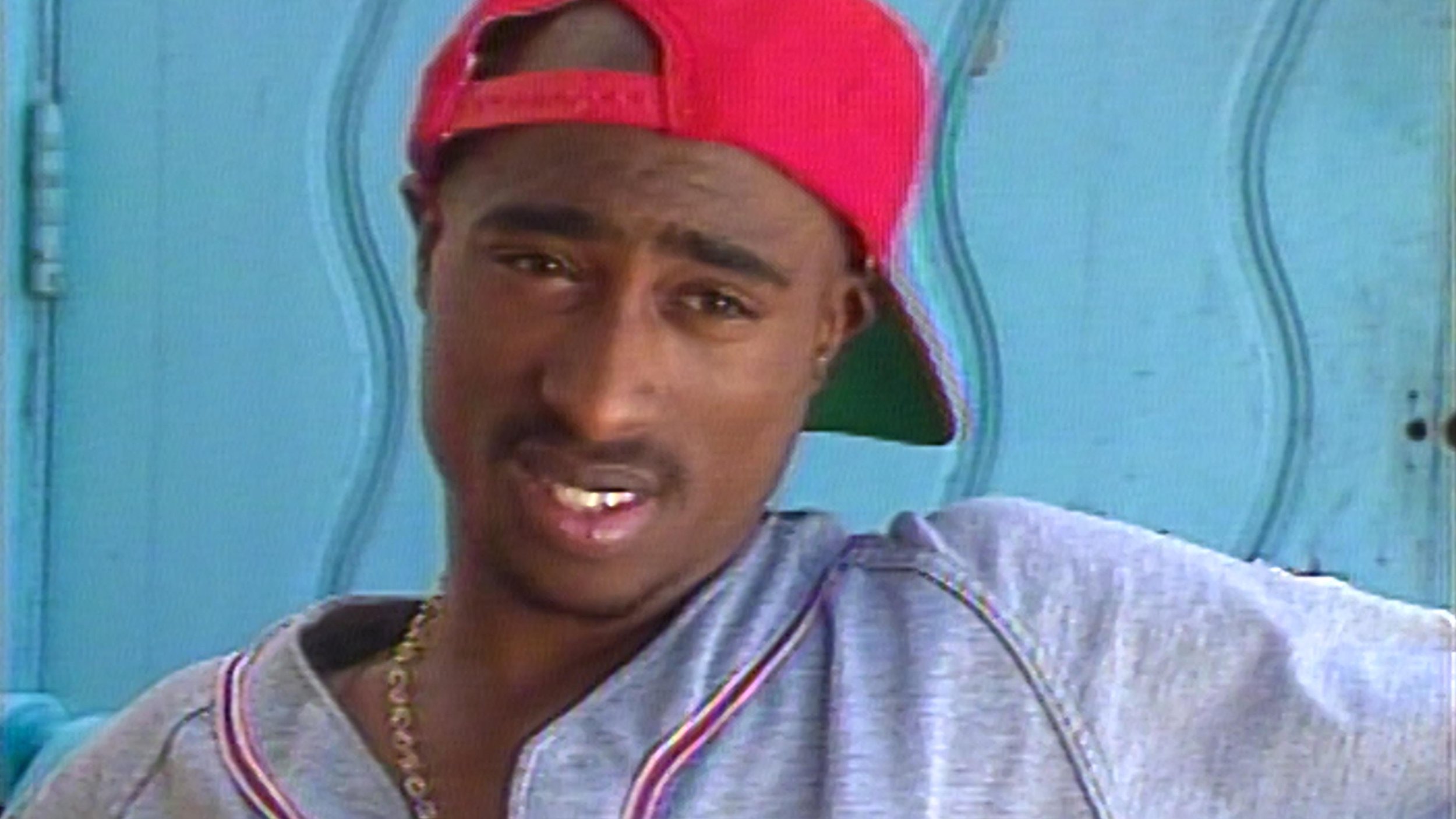

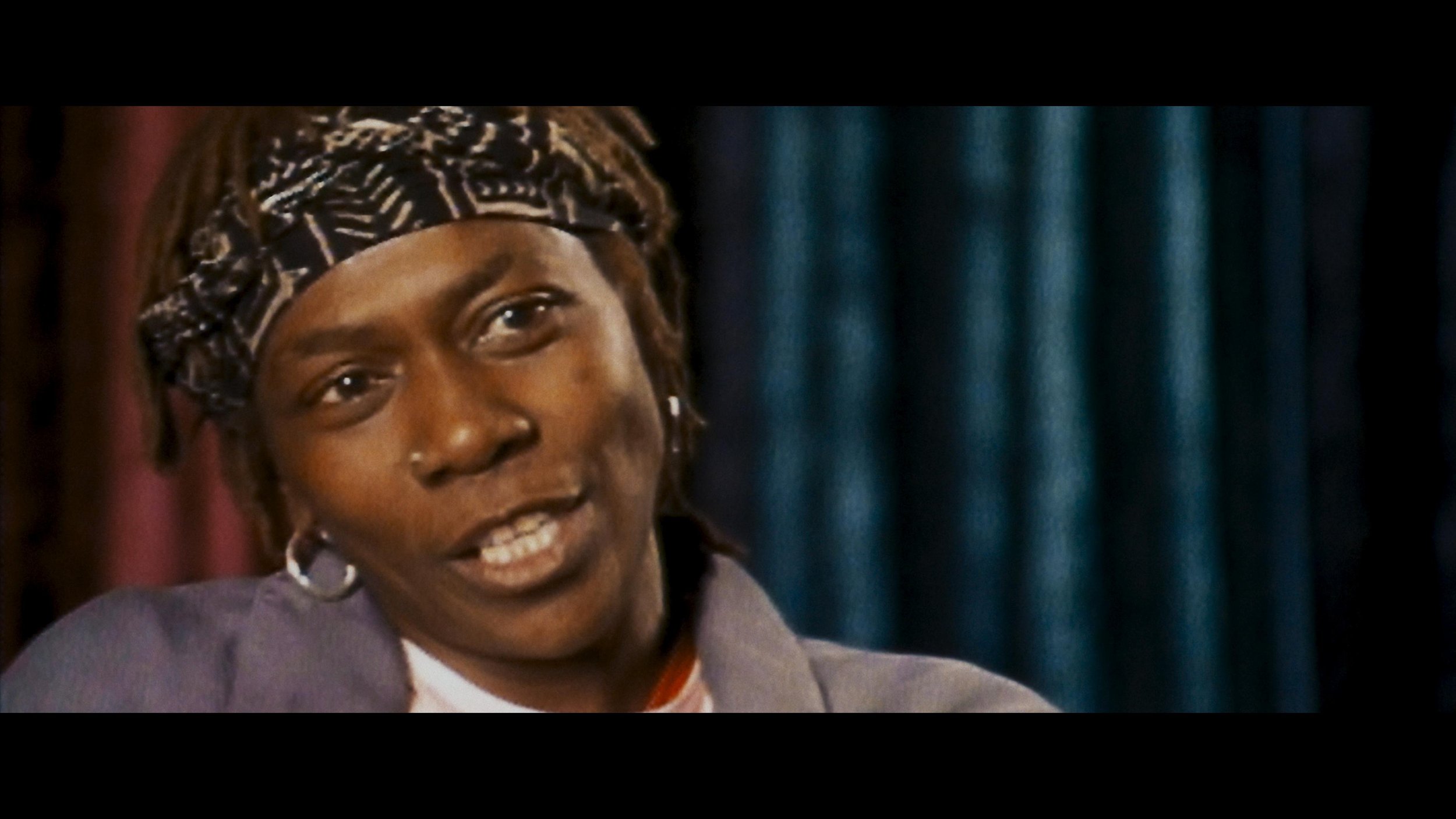

Dear Mama

7/12 - Update: “Dear Mama” Emmy nomination for Best Documentary or Nonfiction Series

Well, if you are my age and grew up in the ’90s, you listened to Tupac. Even all of us white boys from Camarillo knew every word to every song. His music really was the soundtrack to the last decade of the millennium and my youth.

Tonight is the final installment of “Dear Mama,” FX’s most-watched unscripted show.

Perfect for a Mother’s Day weekend! Please go check it out on FX tonight or streaming on Hulu.

Allen Hughes directed this insightful docuseries. Fitting, because Allen and his brother directed Tupac’s early music videos. Sure, there was a bit of drama, but that adds to the flavor of the story. That connection to the material made Hughes the quintessential choice for captaining this ship. Tupac wasn’t any one thing; he was more like an eclectic stew of many influences and identities. One thing is for sure. Dude was thug life for real.

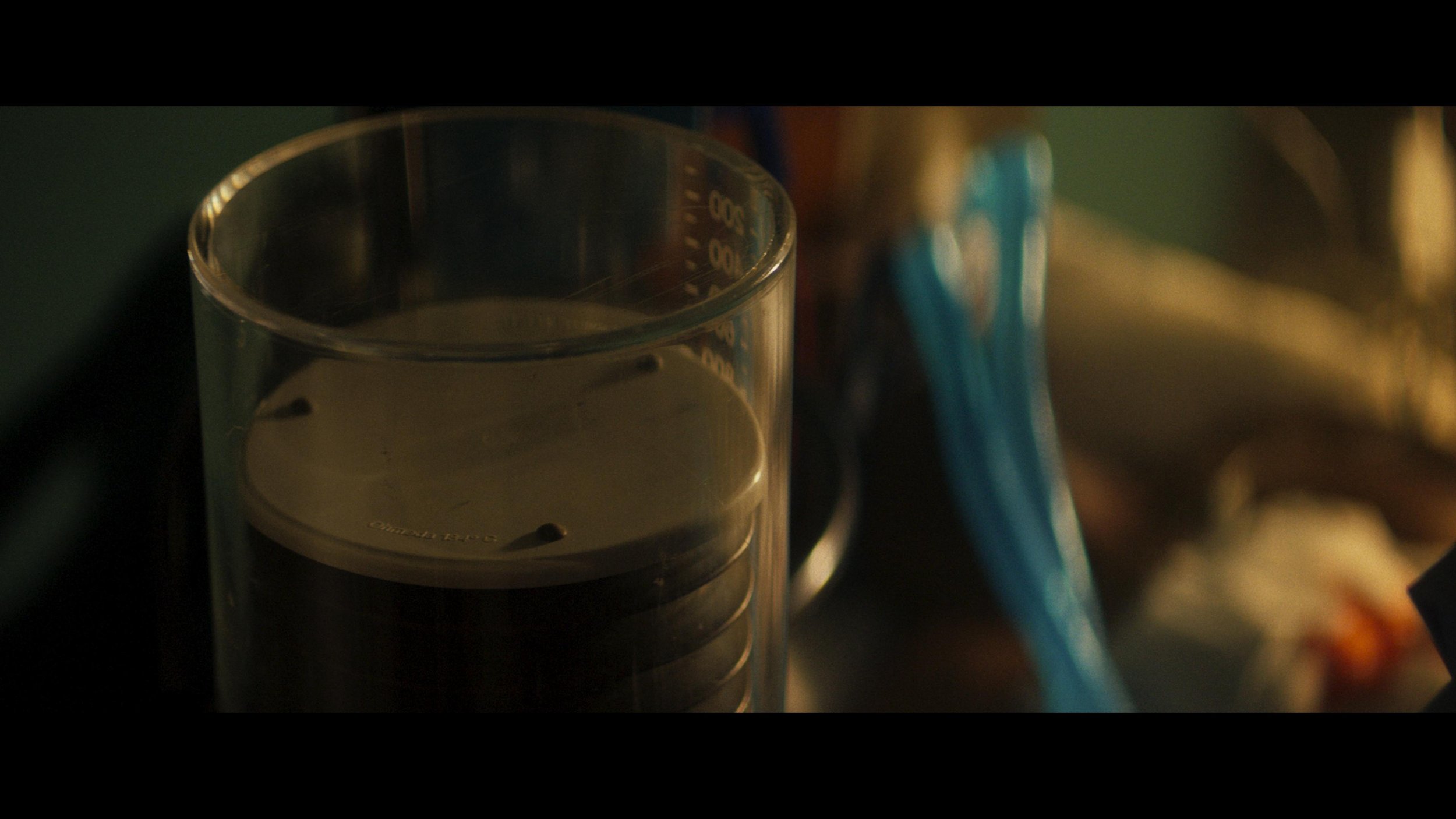

Cognac hues or Hughes as it were

Allen was clear on the look and vibe he wanted for the series. Cognac was the word. We spent a couple of weeks developing a look that feels like you have filtered the light through a fine liquor. We also used LiveGrain to achieve that perfect end-of-the-film-era Kodak grain structure of the ’90s.

Documentary grading is an entirely different beast. Here are a few tips for you to tackle your next interview-based production.

Color management: I preach this a lot, but it is even more critical with many different sources.

Sounds basic, but group your interviews.

Normalize the frame rate upfront.

AI up-rez is like salt; a little is good, but too much ruins the dish. Don’t be afraid to let some pictures just look old.

Build a KEM reel of all interview setups. Having the A and B cam shots together in the timeline will help you reference grades quickly.

The first step was look development. Allen had already shot some of the interviews, which we used to refine the look. I built an LMT that had the cognac golden vibe. I used that look and the ACES standard outputs to create a 709 LUT for Avid media creation. Eric DeAzevedo was the operator responsible for many terabytes of dailies. We also normalized all the archival footage to 23.98 during the dailies step. Cortex was used to make the MXF files and bins. We had to double-hop to render in LiveGrain since it wasn’t supported in Cortex at the time.
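Normalizing to 23.98 means every archival clip plays on the same 24000/1001 timebase. The dailies tools’ actual method isn’t described here; as a simple illustration, this sketch computes which source frame should appear at each output frame when you keep real-time duration and drop or repeat frames (latest-frame sampling). Pulldown removal or motion-compensated retiming will often look better on interlaced or 29.97 material.

```python
import math
from fractions import Fraction

TARGET_RATE = Fraction(24000, 1001)  # "23.98"


def source_frame_map(src_rate, n_out):
    """Source frame index shown at each output frame on the 23.98 timebase.

    Keeps real-time duration: frames are dropped (faster sources) or repeated
    (slower sources) by taking the latest source frame at each output time.
    Pass exact rates, e.g. Fraction(30000, 1001), not floats like 29.97.
    """
    src_rate = Fraction(src_rate)
    return [math.floor(i * src_rate / TARGET_RATE) for i in range(n_out)]


# 29.97 archival: every fifth source frame is dropped.
print(source_frame_map(Fraction(30000, 1001), 8))  # [0, 1, 2, 3, 5, 6, 7, 8]
```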

Early on, we were still in the late stages of the COVID lockdown. I built a reel of every interview setup and had a ClearView session with Hughes and Josh Garcia (Producer). This session was super critical to our success going forward. It set the bible for the show's look and ensured that Allen’s vision was consistent through the many days of shooting. At the start of each episode, I applied our base settings using a “Fuzzy” match. (Yes, that is a real Baselight thing.) Basically, “Fuzzy” is a setting that allows the machine to match grades presumed to be from the same camera roll rather than matching by timecode. This put all the interviews 90% of the way there from the get-go. The next step was to sort the timeline by clip name and time of day. I would then work through a pass where I would track the shapes and balance out any inconsistencies in lighting as the sun hung lower throughout the day. The archival footage didn’t have as graceful a strategy applied. Each shot was its own battle, as the quality differed from source to source. My main goal was to ensure that it was cohesive and told the story Allen was crafting.
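Baselight’s Fuzzy match is its own feature, and its implementation isn’t described here. The idea can be sketched with a hypothetical data model: key base grades by camera roll rather than by timecode, then sort the timeline by clip name and time-of-day timecode for the balancing pass. The `Shot` fields and clip-naming scheme are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Shot:
    clip_name: str       # e.g. "A003C012" (hypothetical camera naming)
    camera_roll: str     # e.g. "A003"
    tod_timecode: str    # time-of-day source timecode, "HH:MM:SS:FF"
    grade: dict = field(default_factory=dict)


def apply_base_grades(shots, base_grades):
    """Copy a base grade onto every shot from a matching roll, ignoring timecode."""
    for shot in shots:
        base = base_grades.get(shot.camera_roll)
        if base is not None:
            shot.grade = dict(base)
    return shots


def review_order(shots):
    """Sort by clip name, then time of day (fixed-width timecode sorts as text)."""
    return sorted(shots, key=lambda s: (s.clip_name, s.tod_timecode))
```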

The first deliverable out of the gate was a theatrical version for the Toronto International Film Festival. I graded in ACEScc going out to PQ 1000 nits. Then that was run through the DoVi analysis, and a P3D65 48-nit version was trimmed. Finally, we applied a P3D65-to-XYZ LUT on the output render to create the DCDM.
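The last step of that chain, going from P3D65 display code values to DCDM X'Y'Z', can be sketched from the published pieces: a gamma 2.6 decode, an RGB-to-XYZ matrix built from the P3 primaries and D65 white, scaling to 48 nits, and the DCI 12-bit encoding with its 52.37 cd/m² normalizing constant. This illustrates the math; it is not the LUT used on the show.

```python
import numpy as np


def rgb_to_xyz_matrix(primaries, white):
    """Build an RGB -> XYZ matrix from xy chromaticities (standard derivation)."""
    def xy_to_xyz(x, y):
        return np.array([x / y, 1.0, (1.0 - x - y) / y])

    m = np.column_stack([xy_to_xyz(*p) for p in primaries])
    scale = np.linalg.solve(m, xy_to_xyz(*white))  # make RGB (1,1,1) hit the white point
    return m * scale


P3_PRIMARIES = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]
D65_WHITE = (0.3127, 0.3290)
P3D65_TO_XYZ = rgb_to_xyz_matrix(P3_PRIMARIES, D65_WHITE)


def p3d65_to_dcdm(code_values, peak_nits=48.0):
    """P3D65 gamma 2.6 code values (0-1) -> 12-bit DCDM X'Y'Z' code values."""
    rgb = np.clip(np.asarray(code_values, float), 0.0, 1.0) ** 2.6  # display light, 0-1
    xyz = rgb @ P3D65_TO_XYZ.T * peak_nits                          # absolute cd/m^2
    xyz_prime = (np.clip(xyz, 0.0, None) / 52.37) ** (1.0 / 2.6)     # DCI encoding
    return np.round(4095 * xyz_prime).astype(int)
```

A full-white P3D65 signal at 48 nits lands at a Y' code value of 3960, a handy sanity check when inspecting a DCDM.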

The biggest challenge of this show was keeping up with editorial. As you can imagine, documentary storytelling is honed in the edit bay. The edit was constantly being updated as shots were cleared or discovered. Back at my shop, Leo Ferrini would constantly update my project to chase editorial. Multi-Paste (Remote Grades for our Resolve friends) was clutch in this situation. We took the old grades and copied them across. Leo would categorize the new material so I could sort the scene for the changes. The timelines constantly evolved and took shape until we got Allen in for the final grade. Allen has a great eye and religiously kept us in the world he had envisioned. We paid particular attention to eye-trace and ensured the information from each visual told a straightforward story without distraction. Next was a pass of Dolby trimming to take the approved PQ to 709. We would send that 709 file to Allen and get notes before creating the final IMF for delivery.

A super big thanks to Paul Lavoie for managing this one. There were many moving parts on this production, but thanks to him, I rarely felt it. It’s a blessing to have a partner that doesn’t mind getting his hands dirty even though he’s one of the suits😜.

Be sure to check out this killer doc about one of our generation’s most prolific artists, told through Hughes's equally unparalleled artistic voice. Allen is a true master of many formats but has solidified his place as one of the best documentarians. Thanks for taking the time to peek behind the curtain, and let me know what you think.

Here are some more before and afters. Mellow yella’ Dan Muscarella would have been proud.

How To - Dolby Vision

Dolby Vision - How To and Best Practices

What is Dolby Vision

Dolby Vision is a way to dynamically map HDR to different display targets. At its core, the system analyzes your media and transforms it to display ranges below your mastering target, typically SDR at 100 nits.

Project Setup

The first step is to license your machine. Once that is in place, you need to set up your project. Go into settings and set up your CMU (Content Mapping Unit). Back in the day, we used an external box, but nowadays the software does it internally. You will also need to set the CMU version: V4 is the current iteration, whereas V2.9 is a legacy version that some older TVs use. Finally, set your mastering display. In my case, that is a Sony X300 set up for PQ P3 D65 1000 nits.

Baselight Project Setup

Resolve Project Setup
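For reference, PQ (SMPTE ST 2084) is the absolute curve behind that 1000-nit mastering setup. Here is a minimal sketch of the encode and decode, using the constants from the standard:

```python
import numpy as np

# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_encode(nits):
    """Absolute luminance (cd/m^2, 0-10000) -> PQ signal (0-1)."""
    y = np.clip(np.asarray(nits, float) / 10000.0, 0.0, 1.0) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


def pq_decode(signal):
    """PQ signal (0-1) -> absolute luminance in cd/m^2."""
    e = np.clip(np.asarray(signal, float), 0.0, 1.0) ** (1.0 / M2)
    y = np.maximum(e - C1, 0.0) / (C2 - C3 * e)
    return 10000.0 * y ** (1.0 / M1)
```

A 1000-nit master tops out at about 0.75 of the PQ signal range; the remaining headroom up to 10,000 nits goes unused.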

It’s not what you said, it’s your tone

The goal is to make our HDR master look good on an SDR display. To do this, we need to tone map our HDR ranges to the corresponding SDR ranges. This is a nonlinear relationship, and our shadows, mid-tones, and highlights will land in the wrong areas if we don’t tone map them first. See below for an example of an SDR image that has not been tone mapped correctly. You can see the highlights are way too hot. Now, we could use a curve and shape our image into a discrete master for SDR, but most studios and streamers request a Dolby delivery regardless of whether a separate SDR grade was made. Plus, Dolby does a pretty decent job of getting you there quickly since the v4 release.

The first step is to analyze your footage. This results in three values that set the tone curve: min, max, and average. These values tell the system how to shape the curve to get a reasonable rendering of your HDR master in SDR.

Image courtesy of Dolby
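Dolby’s analysis is proprietary, so this is only an approximation of the idea. Level 1 metadata is commonly described as the minimum, maximum, and average of each pixel’s max(R, G, B) expressed in PQ. Here is a minimal per-shot sketch under that assumption, without the outlier handling a real analyzer would likely apply:

```python
import numpy as np

# SMPTE ST 2084 (PQ) constants
M1, M2 = 2610 / 16384, 2523 / 4096 * 128
C1, C2, C3 = 3424 / 4096, 2413 / 4096 * 32, 2392 / 4096 * 32


def pq_encode(nits):
    y = np.clip(np.asarray(nits, float) / 10000.0, 0.0, 1.0) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


def l1_style_stats(frames_nits):
    """Approximate per-shot min/max/average from (H, W, 3) frames in absolute nits.

    Assumption: statistics of per-pixel max(R, G, B) in PQ, pooled as the
    lowest min, highest max, and mean of the per-frame averages.
    """
    mins, maxs, avgs = [], [], []
    for frame in frames_nits:
        max_rgb = pq_encode(np.max(frame, axis=-1))
        mins.append(max_rgb.min())
        maxs.append(max_rgb.max())
        avgs.append(max_rgb.mean())
    return float(min(mins)), float(max(maxs)), float(np.mean(avgs))
```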

What we are trying to do here is fit 10 pounds of chicken into an 8-pound bag. Something has to give, usually the bones, but the goal is to keep as much chicken as you can. Rather than toss data out, we compress it instead. The system calculates the min, max, and average light levels. The idea is to keep your average, the “meat and potatoes” of your shot, intact while compressing the top and bottom ranges. The end result is an SDR image that resembles your HDR, only flatter.
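To make the chicken analogy concrete, here is a toy tone curve, not Dolby’s, that does the same kind of job. It keeps a slope of 1 (in stops) around the analyzed average, so the "meat and potatoes" keep their contrast, and compresses everything above and below with a tanh shoulder and toe that approach the target’s limits instead of clipping. The target values are hypothetical.

```python
import numpy as np


def toy_tone_map(nits, src_avg, tgt_min=0.005, tgt_avg=10.0, tgt_max=100.0):
    """Map HDR nits to an SDR range, anchored on the shot's average level."""
    x = np.log2(np.maximum(np.asarray(nits, float), 1e-9) / src_avg)  # stops from average
    head = np.log2(tgt_max / tgt_avg)   # stops of room above the target average
    foot = np.log2(tgt_avg / tgt_min)   # stops of room below it
    # tanh has slope 1 at zero, so contrast near the average is preserved,
    # while its asymptotes squeeze highlights and shadows into the target range.
    y = np.where(x >= 0, head * np.tanh(x / head), foot * np.tanh(x / foot))
    return tgt_avg * 2.0 ** y
```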

How a colorist goes about the analysis is just as important as the analysis itself. This gets into a religious debate more than a technical one, and everything from this point on is my opinion based on my experiences with the tech. Probably not what Dolby would say.

The original design of the system wanted you to analyze every shot independently. The problem with this approach is it can take a consistent grade and make it inconsistent depending on the content. Say you had two shots from the same scene.

One side of the coverage shoots the character with a blown-out window behind them. The other side shoots into the darker part of the house. Even though you as a colorist have balanced them to taste, the Dolby analysis will return two very different values for these shots. To get around this, I find it is better to average the analysis for each scene rather than analyze each shot independently. The first colorist I saw work this way was my good friend and mentor Walter Volpatto. He went toe-to-toe with Dolby because his work was getting QC rejections based on his method. He would analyze only a grey ramp, with the d-min and d-max values representing his media, and apply that analysis to his entire timeline. His thinking was that if it was one transform up to HDR, it should be one transform down.

Most studio QC operations now accept this approach as valid metadata (thank you, Wally!). While I agree with his thought process, I tend to work with one analysis per scene. Resolve has this functionality built in. When I’m working in Baselight, I set it up this way and copy the scene-averaged analysis to every shot in preparation for the trim.

Scene average analysis in Baselight.
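Scene averaging can be sketched as replacing each shot’s min, average, and max with a value pooled across its scene. This sketch uses a frame-weighted mean for all three, which is an assumption; Resolve’s built-in scene analysis or a Baselight setup may pool the values differently.

```python
from collections import defaultdict


def scene_average(shots):
    """shots: dicts with 'scene', 'frames', 'min', 'avg', 'max' (PQ, 0-1).

    Returns copies of the shots with min/avg/max replaced by frame-weighted
    scene means, so every shot in a scene gets the same analysis.
    """
    totals = defaultdict(lambda: [0, 0.0, 0.0, 0.0])  # frames, min, avg, max sums
    for s in shots:
        t = totals[s["scene"]]
        t[0] += s["frames"]
        for i, key in enumerate(("min", "avg", "max"), start=1):
            t[i] += s[key] * s["frames"]
    pooled = {scene: {"min": t[1] / t[0], "avg": t[2] / t[0], "max": t[3] / t[0]}
              for scene, t in totals.items()}
    return [{**s, **pooled[s["scene"]]} for s in shots]
```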

Setting the tone

Now that your analysis is complete, it’s time to trim. First, you need to set which display output your trim is targeting and the metadata flag for the intended distribution. You can also set any masking that was used so the analysis doesn’t include the black letterbox pixels. The most common targets are 709 at 100 nits, P3 at 48 nits, and PQ at 108 nits. The 709 trim is for SDR home distribution, whereas the other two are for theatrical distribution. We want to keep the home video and cinema trims separate because displays that fall between two trim targets will be interpolated. The theatrical 108-nit trim is very close to the home video 100-nit trim, yet the two will be rendered very differently, because the theatrical grade is intended for a dark theater while home viewing assumes dim surround lighting. Luckily, Dolby recognized this, and that is why we have separation of church and state now. The process for completing these trims is the same, though; only the target changes.

Trim the fat

Saturation plus lift, gamma, and gain is the name of the game. You also have advanced tools for highlight clipping and mid-tone contrast. Additionally, you have very basic secondary controls to manipulate the hue and saturation of the six vectors.

Baselight Dolby trim controls.

Resolve Dolby trim controls.
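The exact math behind Dolby’s trim controls isn’t published in this post, so this sketch uses one common textbook form of lift, gamma, and gain plus a luma-preserving saturation control. It is handy for seeing how the controls interact: lift raises the shadows without moving white, while a gamma below 1 (in this convention) pulls the mid-tones back down.

```python
import numpy as np

REC709_LUMA = np.array([0.2126, 0.7152, 0.0722])


def lift_gamma_gain(x, lift=0.0, gamma=1.0, gain=1.0):
    """Lift pushes black toward white, gain scales, gamma bends the mid-tones."""
    x = np.clip(np.asarray(x, float), 0.0, 1.0)
    y = np.clip(gain * (x + lift * (1.0 - x)), 0.0, 1.0)
    return y ** (1.0 / gamma)


def saturate(rgb, amount):
    """Scale each channel's distance from Rec. 709 luma."""
    rgb = np.asarray(rgb, float)
    luma = (rgb * REC709_LUMA).sum(axis=-1, keepdims=True)
    return luma + amount * (rgb - luma)
```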

These secondary controls are very useful when you have extremely saturated colors that are on the boundaries of your gamut. I hope Dolby releases a way to only target the very saturated color values instead of the whole range of a particular vector, but for now, these controls are all we have.

Mid-tone offset

Another tool that affects the analysis data but could be considered a trim is the mid-tone offset. A good way to think about this tool is a manual shifting of what your average is. This slides the curve up or down from the midpoint.

I usually find the base analysis and subsequent standard conversion a little thick for my taste. I start by finding a pleasing trim value that works for a majority of shots. Then I ripple that as a starting place and trim from there until I’m happy with the system’s output. The before and after below shows the standard analysis output vs. where I ended up with the trim values engaged.

It’s time to export once you are happy with the trims for all of your needed outputs. This is done by exporting the XML recipes that when paired with your PQ master will create all the derivative versions.

XML

Here are two screenshots of where to find the XML export options in Baselight and Resolve.

Right-click on your timeline -> Timelines -> Export -> Dolby XML

Shots View -> Gear Icon -> Export Dolby Vision Metadata… This will open a menu that lets you choose your location and set the primaries for the file.

The key here is to make sure that you are exporting an XML that reflects your deliverable, not your DSM. For example, I typically export PQ P3 D65 TIFFs as the graded master files. These are then taken into Transkoder, placed into a Rec. 2020 container, and married with the XML to create an IMF. It’s important to export a Rec. 2020 XML instead of a P3 one so that when it is applied to your deliverable, it yields the intended results. You can always open your XML in a text editor if you are unsure of your declared primaries. I have included a screen grab of what the XML should look like, with the Rec. 2020 primaries on the left and the P3 primaries on the right. Always go by the numbers, because filenames can lie.

Rec2020 XML vs P3 D65
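Going by the numbers can be automated once you have read the primaries out of the XML. The element layout differs between metadata versions, so parsing is left out here; this sketch only compares the declared red, green, and blue chromaticities against the standard Rec. 2020, P3, and Rec. 709 values within a small tolerance (the 0.002 tolerance is an assumption).

```python
import math

KNOWN_PRIMARIES = {
    "Rec.2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
    "P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec.709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
}


def identify_primaries(red, green, blue, tol=0.002):
    """Name the gamut whose R, G, B xy chromaticities all match within `tol`."""
    declared = [red, green, blue]
    for name, reference in KNOWN_PRIMARIES.items():
        if all(math.dist(d, r) <= tol for d, r in zip(declared, reference)):
            return name
    return "unknown"
```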

There is beauty in the simplicity of this system. Studios and streamers love the fact that there is only one serviceable master. As a colorist, I love the fact that when there is a QC fix, you only need to update one set of files and sometimes the XML. That’s a whole lot better than at the height of the 3D craze, when you could have up to 12 different masters, and that is not even counting the international versions. I remember finishing Katy Perry’s “Part of Me” in 36 different versions. So in retrospect, Dolby did us all a great service by transmuting all of those versions we used to painstakingly create into one manageable XML sidecar file.

Thanks for reading

I bet that in the future these trim passes will go the way of the 4x3 version, especially with the fantastic HDR displays available from Samsung, Sony, and LG at continually lower price points. Remember, the Dolby system only helps you at home if your display is something other than what the media was mastered on. Until then, I hope this helps.

Check out this Dolby PDF for more information and a deeper dive into the definitions of the various XML levels. As always, thanks for reading.

-JD

The Sea Beast - High Dynamic Range on the High Seas

Hey everyone, The Sea Beast is out today on Netflix, and I am super thrilled to share some of the innovative aspects of the color finishing. I hope you all enjoy reading about the techniques used and the “why” behind some of the choices. It’s a gorgeous film, and I’m very grateful to have had the opportunity to contribute my small part.

If it’s not on the page it’s not on the stage

It all starts with a script. In Sea Beast’s case, that script was co-penned by the story’s visionary Director Chris Williams. I’m a big fan of Chris’s past work, so getting the chance to collaborate with him on his latest was exciting, to say the least. He’s sort of animation royalty in my book.

The world was conceived from the minds of two fantastic artists. Matthias Lechner wore the mantle of art director while Woon Jung took up the production designer role. Sony ImageWorks was tasked with bringing the characters to life, supervised by Stirling Duguid. Steven Schweickart kept us on time and honest, while Tim Archer supervised the post. Joyce Arrastia was the editor who put it all together. Netflix assembled a dream team of animation heavyweights on this one, and it shows on the screen.

The World

The look of the world in one word would be painterly. We used FilmLight’s Baselight to “paint” the frame with a lot of soft, atmospheric touches.

Much of the film takes place in humid locations with a lot of volumetric haze. This does two things for the picture. One, it softens what could be a sometimes overly contrasty CG aesthetic. Two, it really plays up the scale of the world by imparting a sense of depth.

We always made sure to play the background a little desaturated and low con. This was inherent in the design and execution of the files coming out of ImageWorks, but was further enhanced in DI with the use of mattes and depth channels.

Cyan blues were also very important, as you can imagine for a movie set out on the sea.

The sky and the weather were closely linked to the dramatic plot points of the story. We made sure that the sky felt like a reflection of the mood that was at play in the scene. One such example was when we darkened the storm clouds in the second act. This gave a threatening feeling as the characters trudged forward in the story.

Shapes used to create an ominous feeling in the clouds.

Enhancement of the Blood moon to create a foreboding presence.

Another important color consideration was the look of one of the main characters named Red. As her name implies, the character is a very saturated red hue. We made sure to play up the complementary nature of the relationship to the opposing cyan sky and ocean using secondary hue swings.

Special attention was paid to ensure Red had the right hue for the environment. This meant slightly leaning Red’s skin color to align with the scene: warmer in sunlight and more toward the cooler side of magenta at night.

A different type of script

The sky was not the only color consideration when it came to plot. Matthias and Woon came up with a color script that we diligently adhered to. Here is a graphic of the most important hues vs. time.

You can see that as the film builds tension, we leave the “pretty colors” for a more earthen palette. One reference Matthias gave me was “Delicatessen,” shot by the great Darius Khondji. I love that film and immediately knew what he was going for. We used the uneasy, green side of yellow to symbolize corruption.

As the characters’ adventure unfolds, we see that certain arcs are “corrupted” by revenge, greed, or just plain ignorance. We linked the intensity of this descent to the intensity of the yellow look we developed. I achieved this by mixing back the full look at differing levels of opacity to get peaks and valleys over time. I feel that this device goes a long way in helping set the tone of certain moments. The idea is for it to be felt but barely perceived.
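Mixing the look back at varying opacity over time amounts to a keyframed blend. Here is a minimal sketch with linear interpolation between hypothetical (frame, opacity) keyframes; the keyframe values are illustrative only.

```python
import numpy as np


def opacity_at(frame, keyframes):
    """Linearly interpolate opacity between (frame, opacity) keyframes, sorted by frame.

    Before the first or after the last keyframe, the end value is held.
    """
    frames, values = zip(*keyframes)
    return float(np.interp(frame, frames, values))


def mix_look(base, look, opacity):
    """Blend the full look over the base grade at the given opacity (0-1)."""
    base = np.asarray(base, float)
    return base + opacity * (np.asarray(look, float) - base)


# Hypothetical arc: the corrupted look builds, peaks, then eases back.
KEYS = [(0, 0.0), (1200, 0.15), (5400, 0.6), (7000, 0.25)]
pixel = mix_look([0.40, 0.35, 0.30], [0.42, 0.40, 0.22], opacity_at(3000, KEYS))
```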

HDR modulation

Early in testing, I received a note from the team that the skies were too busy vs what they designed and were used to seeing. I started to explore what was causing this reaction because I liked the look that I had first presented very much. Once I dug in a bit more, I found that due to COVID restrictions most of the approvals were done in SDR. This was the first time the creatives were seeing what was hiding in their data.

I built an LMT to solve this concern. Up to this point, the show’s pipeline was vanilla ACES. The LMT mimicked what the sRGB output looked like but tone mapped to 1000-nit PQ. I started with an exact match. Then I used a simple curve to “stretch” the picture so that middle grey was mapping correctly. I then rolled off the top end, mapping the specular highs to around 600 nits. This gave us the feel of the SDR but still took advantage of the extra range.

Simplifying the sky by blending the LMT layer
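The two moves in that LMT can be sketched in display light: a gain so the SDR-matched mid grey lands where it should in HDR, then a smooth shoulder that lets speculars approach roughly 600 nits. This is not the show’s LMT; the grey levels and knee are hypothetical placeholders, and an exponential roll-off is just one choice of shoulder (it meets the linear part with matching slope at the knee).

```python
import numpy as np


def sdr_match_to_hdr(nits, sdr_grey=18.0, hdr_grey=26.0, knee=200.0, ceiling=600.0):
    """Stretch an SDR-matched image so mid grey maps correctly, then roll off highs.

    All default values are hypothetical placeholders.
    """
    stretched = np.asarray(nits, float) * (hdr_grey / sdr_grey)  # linear "stretch"
    room = ceiling - knee
    over = np.maximum(stretched - knee, 0.0)
    # Exponential shoulder: slope 1 at the knee, asymptotically approaching the ceiling.
    rolled = knee + room * (1.0 - np.exp(-over / room))
    return np.where(stretched > knee, rolled, stretched)
```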

This was a fantastic way to simplify the sky and get the picture closer to the original artwork. The LMT approach proved to be much more efficient and safer than a key would have been. We also found that in some cases the opposite was true. There are a handful of pivotal moments where maximizing the range was to our benefit. Certain shots hit harder from being more complicated in their HDR rendering. The images shown here in SDR don’t really showcase what is happening above 100 nits, but hopefully, you get the idea.

We ended up riding the opacity of the LMT to taste. This gave us one more device that we could use to link the look with the narrative arc. Here is a graph of the “amount” of dynamic range over time.

You can see that the trend line shows we used more range as the film progressed. You might think that the curve would correlate to the action scenes, but I noticed while gathering the data for this graph that it was actually coupled to the scenes with the most emotional weight. This was a first for me, but I plan on using this technique on other shows going forward. I have always said just because you have the range doesn’t mean you need to use it. HDR is like salt: a little goes a long way, and too much ruins the dish. I now feel it’s better to approach the extra range like punctuation that enhances key moments. If everything has an exclamation point, it sort of loses its meaning.

Mastering

We spent two weeks on the HDR grade. I’m a big proponent of HDR first. It is your highest quality master and the one that will live on past the others. Once we had the HDR P3D65 1000nit master perfect, we moved on to the Dolby SDR trim. This was pretty straightforward except for a couple of scenes. There is a scene early on with a very flared vibe. The Dolby tools were not allowing us to get to where Chris wanted to play it. I was unable to achieve the toe compression in the shadows that I wanted no matter how I trimmed the lift up and gamma down (I call this the gamma shuffle.) Changing the analysis and the L3 mid data didn’t get us there either. We needed to use the higher precision tools found in the color corrector.

We reopened the HDR pass, and I made a curve tweak to the toe while keeping an eye on the SDR output. I found something that worked for both and was by no means a sacrifice in the HDR world. Other than those scenes, it was business as usual.
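For reference, a 1000-nit HDR master like this one is encoded with the PQ curve defined in SMPTE ST 2084. The sketch below implements the standard ST 2084 encode and decode to show where 100 nits (the traditional SDR reference peak) and 1000 nits land as normalized signal values. It covers the transfer function only; the P3 D65 primaries, the Dolby analysis, and the trims are not modeled.

```python
# SMPTE ST 2084 (PQ) constants, written as floats so the math is float division
M1 = 2610.0 / 16384.0
M2 = 2523.0 / 4096.0 * 128.0
C1 = 3424.0 / 4096.0
C2 = 2413.0 / 4096.0 * 32.0
C3 = 2392.0 / 4096.0 * 32.0


def pq_encode(nits):
    """Absolute luminance in cd/m^2 (nits) -> normalized PQ signal (0..1)."""
    y = max(nits, 0.0) / 10000.0  # PQ is defined up to 10,000 nits
    ym1 = y ** M1
    return ((C1 + C2 * ym1) / (1.0 + C3 * ym1)) ** M2


def pq_decode(signal):
    """Normalized PQ signal (0..1) -> absolute luminance in cd/m^2."""
    e = max(signal, 0.0) ** (1.0 / M2)
    y = max(e - C1, 0.0) / (C2 - C3 * e)
    return 10000.0 * y ** (1.0 / M1)


for nits in (100, 1000, 10000):
    print(nits, "nits ->", round(pq_encode(nits), 4))
```

Running it shows that 100 nits already sits around the middle of the PQ signal range, which is one way to see how much headroom a 1000-nit master leaves for the highlights.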

We also created a theatrical version for a DCP. We did that pass in a day and a half, making slight contrast and saturation tweaks. I did this in the same timeline, giving a "Theatrical" category to the stacks that I used specifically for the 2.6 gamma, 48-nit XYZ version. Then, in the exports of the other versions, I set this category to "bypass." There is a deeper dive into this type of workflow in my post on Space Jam: A New Legacy under the "multiple deliveries, one timeline" heading.
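The "one timeline, many deliveries" idea boils down to tagging layers with a category and switching categories off per export. Here's a toy model of that logic. The `Layer` class, the category names, and the `render` function are hypothetical illustrations, not Baselight's API or data model.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Layer:
    name: str
    op: Callable[[float], float]      # hypothetical per-value grade operation
    category: Optional[str] = None    # e.g. "Theatrical"; None means always on


def render(value: float, stack: List[Layer], bypass: Tuple[str, ...] = ()) -> float:
    """Apply each layer in order, skipping any whose category is bypassed."""
    for layer in stack:
        if layer.category in bypass:
            continue
        value = layer.op(value)
    return value


stack = [
    Layer("base grade", lambda v: v * 1.1),
    Layer("theatrical contrast", lambda v: v ** 1.05, category="Theatrical"),
]

theatrical = render(0.5, stack)                        # all layers on
hdr_home = render(0.5, stack, bypass=("Theatrical",))  # theatrical tweaks off
print(theatrical, hdr_home)
```

The appeal of this design is that every version shares the same base grades, so a fix made once propagates to all deliverables, and only the tagged tweaks differ per export.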

Archival

We also created an ACES AP0 deliverable in addition to the standard display-referred masters. This GAM (Graded Archival Master) had all of the grades plus the LMT baked into a linear-gamma EXR with ACES primaries. Applying the standard ACES transforms to the GAM will get you back to the display targets we had in DI. We also created a NAM (Non-Graded Assembly Master), which is only the conform without the grades. Both of these masters can be sourced for remastering in the future. As a card-carrying member of People for the Ethical Treatment of Pixels, I can safely say that no pixels were harmed in the making of this motion picture.
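A GAM ends up as scene-linear values on AP0 primaries (ACES2065-1). If the grade lives in an ACEScct/AP1 working space (an assumption here; the post doesn't say which working space was used), the last step before writing the EXR is the standard ACEScct decode followed by the AP1-to-AP0 matrix. Here's a sketch of just that math; writing the EXR file itself is left out.

```python
# ACEScct decode constants (ACES specification S-2016-001)
Y_BRK = 0.155251141552511   # code value where the linear toe meets the log section
A = 10.5402377416545
B = 0.0729055341958355
HALF_MAX = 65504.0          # largest half-float value, the spec's upper clamp

# AP1 -> AP0 (ACEScg -> ACES2065-1), column-vector convention
AP1_TO_AP0 = (
    (0.6954522414, 0.1406786965, 0.1638690622),
    (0.0447945634, 0.8596711185, 0.0955343182),
    (-0.0055258826, 0.0040252103, 1.0015006723),
)


def acescct_to_linear(v):
    """ACEScct code value -> scene-linear value on AP1 primaries."""
    if v <= Y_BRK:
        return (v - B) / A                       # linear toe segment
    return min(2.0 ** (v * 17.52 - 9.72), HALF_MAX)  # log segment


def ap1_to_ap0(rgb):
    """Matrix a linear AP1 triplet into AP0 primaries."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in AP1_TO_AP0)


def acescct_to_aces2065(rgb_cct):
    """ACEScct (AP1) triplet -> linear ACES2065-1 (AP0) triplet for the GAM."""
    return ap1_to_ap0(tuple(acescct_to_linear(v) for v in rgb_cct))


# 18% gray in ACEScct should come back as ~0.18 linear on every channel
print(acescct_to_aces2065((0.4135884, 0.4135884, 0.4135884)))
```

Because each matrix row sums to 1, neutrals stay neutral, which is a quick sanity check when validating an archival render.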

Thanks

A big thank you to Leo Ferrini, Paul Lavoie, Chris Coleman, Patrick Brennan, and the entire Warner PPCS staff. This was a super fun swashbuckling project with an amazing team. I'm looking forward to the next chapter of this rich world and to "sea" what's next for Red, Blue… and green 😉 Thanks for reading, and as usual, reach out with any questions or post in the comments below.

Now go watch The Sea Beast on Netflix today!

Happy Grading,

JD

Kimi - Virtually a Virtual Production

Hey everyone! Steven Soderbergh’s latest “Kimi” is out today on HBO Max. This was a fantastic project to have been a small part of. Here is a quick look at how the color was finished.

Getting the Band Back Together

First, it was great to reunite with Steven and the team. Kimi marks the sixth picture helmed by Soderbergh that I have been credited on. Over the years, a shorthand has developed with his core post team: Corey Bayes, Larry Blake, and Peter Mavromates. The speed of this kind of communication and the trust built over many projects make for an extremely efficient finish. When the maestro is at the helm, it is not movie-making by committee. It is truly the singular voice of one of our generation's greatest auteurs.

Another exciting aspect of this project was that it utilized virtual production technology. Bruce Jones supervised the visual effects on the show. I have worked with Bruce going all the way back to Michael Jackson's "This Is It" concert. The stereoscopic LED portion of that show was directed by Bruce, and I did the 3D finishing. Again, we benefited from a comfort level built over many years. The first task was to ensure the plates matched the 3D asset that would be playing back from Unreal. The second task was to tweak the white balance of the plates so they answered back the way Steven expected once captured by the RED camera off the LED screen. The color pipe for the screen ended up being REDWG Linear to REDWG Log3G10. What happened after it went into the engine was all a bit of voodoo to me. Unreal is an area I would like to devote more time to in the future. A few years ago I made a VR app in Unity; in hindsight, I should have been tinkering with Unreal. Thankfully, the masters at Weta Digital ensured that the footage was tone mapped correctly to the LED display provided by Monolith.

Kimi LED wall background
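For anyone curious what the last step of that screen pipe does, going from REDWG Linear to REDWG Log3G10 changes the transfer function only; the primaries stay REDWideGamutRGB. Here's a sketch of the Log3G10 curve using the constants from RED's published Log3G10 white paper (double-check them against RED's current documentation before relying on them). Nothing here reflects what Unreal or Weta did inside the engine.

```python
import math

# Log3G10 constants as published by RED (verify against RED's documentation)
A = 0.224282
B = 155.975327
C = 0.01      # offset so slightly negative linear values still encode
G = 15.1927   # slope of the linear segment below the offset black point


def log3g10_encode(x):
    """Scene-linear (REDWideGamutRGB) -> Log3G10 code value."""
    x = x + C
    if x < 0.0:
        return x * G  # linear segment for values below -C
    return A * math.log10(B * x + 1.0)


def log3g10_decode(y):
    """Log3G10 code value -> scene-linear."""
    if y < 0.0:
        return y / G - C
    return (10.0 ** (y / A) - 1.0) / B - C


for stops in (-2, 0, 2):
    lin = 0.18 * 2.0 ** stops
    print(f"{stops:+d} stops: linear {lin:.4f} -> Log3G10 {log3g10_encode(lin):.4f}")
```

The curve is built so that 18% gray lands at one third of the code range, leaving room for roughly ten stops above it.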

The Look

Sometimes it's not the buttons you press but the ones you don't. When you have a cinematographer as masterful as Peter Andrews, the only thing to do is mind the picture. A younger me had to learn this lesson the hard way. I often felt my worth as a colorist was defined by how much I did to the picture. Over the years I have come to realize that to be truly in service of great cinematography, you just need taste, a tempered hand, and a language for giving cinematographers consistent results. This is one of the reasons I default to film terminology so often. Most folks know what a stop or a printer point looks like.
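That film vocabulary maps onto simple exposure math. A stop is a doubling or halving of light. For printer points, this sketch assumes the commonly cited lab convention of 0.025 log exposure per point, which works out to roughly 12 points per stop; lab practice varied, so treat the point conversion as approximate.

```python
import math

# Assumed lab convention: one printer point = 0.025 log10 exposure
LOG_E_PER_POINT = 0.025


def stops_to_gain(stops):
    """Linear exposure multiplier for a change in stops (1 stop = 2x light)."""
    return 2.0 ** stops


def points_to_gain(points):
    """Linear exposure multiplier for a change in printer points (assumed convention)."""
    return 10.0 ** (points * LOG_E_PER_POINT)


def points_per_stop():
    """Number of printer points in one stop under the assumed convention."""
    return math.log10(2.0) / LOG_E_PER_POINT


print(stops_to_gain(1))             # one stop up
print(round(points_to_gain(1), 4))  # one point up
print(round(points_per_stop(), 2))  # points in a stop
```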

I used the RED IPP2 color science with FilmLight's Base Grade for a majority of the film. There are a few shapes here and there, but that's pretty much it. Soderbergh lights and shoots what he wants.

Mastering

Most of the heavy lifting was done in HDR P3 D65 PQ at 1000 nits. Second up was the theatrical version, which was a tone-mapped derivative of the PQ master. Once we were in a good place on the projector, we screened in the Steven J. Ross Theater, marrying in the sound for the complete experience. After those two versions were locked, I used the theatrical P3 D65 2.6 gamma output as a reference for the Dolby SDR trim pass. I set up the Baselight in dual-cursor output with a P3-to-709 LUT in-line on the reference output. Then I trimmed the other cursor to get as close as the Dolby tools would allow. There was one last screening to approve the 709 derived from the XML.
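The primaries part of a P3-to-709 conversion can be derived from the published chromaticities. Here's a sketch that builds the normalized primary matrices for P3 D65 and Rec. 709 (both use a D65 white, so no chromatic adaptation is needed) and combines them. It works in linear light only; an actual in-line reference LUT would also have to handle the transfer functions (2.6 gamma in, Rec. 709 display gamma out) and any out-of-gamut colors, none of which are modeled here.

```python
def det3(m):
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def inv3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    d = det3(m)
    return [
        [(m[1][1] * m[2][2] - m[1][2] * m[2][1]) / d,
         (m[0][2] * m[2][1] - m[0][1] * m[2][2]) / d,
         (m[0][1] * m[1][2] - m[0][2] * m[1][1]) / d],
        [(m[1][2] * m[2][0] - m[1][0] * m[2][2]) / d,
         (m[0][0] * m[2][2] - m[0][2] * m[2][0]) / d,
         (m[0][2] * m[1][0] - m[0][0] * m[1][2]) / d],
        [(m[1][0] * m[2][1] - m[1][1] * m[2][0]) / d,
         (m[0][1] * m[2][0] - m[0][0] * m[2][1]) / d,
         (m[0][0] * m[1][1] - m[0][1] * m[1][0]) / d],
    ]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(m, v):
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def xy_to_xyz(x, y):
    """xy chromaticity -> XYZ with Y normalized to 1."""
    return [x / y, 1.0, (1.0 - x - y) / y]


def rgb_to_xyz_matrix(primaries, white):
    """Normalized primary matrix (RGB -> XYZ) from xy chromaticities."""
    cols = [xy_to_xyz(*p) for p in primaries]
    p = [[cols[j][i] for j in range(3)] for i in range(3)]  # primaries as columns
    s = matvec(inv3(p), xy_to_xyz(*white))                  # so RGB(1,1,1) maps to white
    return [[p[i][j] * s[j] for j in range(3)] for i in range(3)]


D65 = (0.3127, 0.3290)
P3 = ((0.680, 0.320), (0.265, 0.690), (0.150, 0.060))
REC709 = ((0.640, 0.330), (0.300, 0.600), (0.150, 0.060))

# Linear P3 D65 RGB -> XYZ -> linear Rec. 709 RGB
P3_TO_709 = matmul(inv3(rgb_to_xyz_matrix(REC709, D65)), rgb_to_xyz_matrix(P3, D65))

for row in P3_TO_709:
    print([round(v, 4) for v in row])
```

The negative off-diagonal terms are the P3 colors that fall outside Rec. 709; they are one reason the Dolby trim can only get "as close as the tools allow" to a wider-gamut reference.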

Watch it on HBO Max

Kimi is a fantastic story that taps into the zeitgeist of the world we are living in now. I’m very excited for it to finally be released. Go check it out on HBO Max and let me know what you think.

Happy Grading,

JD

EDL Extension Cleaner

I needed to remove the extensions from the filenames in an EDL today, so I wrote this right quick. I'm sure some of you out there will need it too, or can at the very least use it as a template to do something more complicated. It's written for Python 2.6 and up.

```python
import os
import sys

### v3: works only on event lines, i.e. lines that start with an event number


def process_text(input_filename, output_filename):
    """Removes file extensions from the source names in an EDL and writes the result to a new file."""
    with open(input_filename, 'r') as input_file:
        with open(output_filename, 'w') as output_file:  # nested "with" keeps Python 2.6 compatibility
            for line in input_file:
                fields = line.split()
                # Event lines begin with an event number (001, 002, ... 100, ...);
                # TITLE, FCM, and comment lines pass through untouched
                if len(fields) > 1 and fields[0].isdigit():
                    filename = fields[1]  # second field holds the source/reel name
                    short_filename = filename.split('.')[0]  # drop everything after the first dot
                    print(short_filename)
                    # Replace only the first occurrence so other fields are left alone
                    line = line.replace(filename, short_filename, 1)
                output_file.write(line)


if __name__ == "__main__":
    if len(sys.argv) < 2:
        sys.exit("Usage: python %s input.edl" % sys.argv[0])
    input_filename = sys.argv[1]  # grabs file from cmd line
    # splitext strips only the final extension, so paths containing dots still work
    output_filename = os.path.splitext(input_filename)[0] + "_cleaned.edl"
    process_text(input_filename, output_filename)
    print("File processed successfully! Output saved to: %s" % output_filename)  # %-formatting for Python 2.6
```

Happy Grading,

JD