Hey everybody! Here is a video that Filmlight just released on their website. It’s a great series and I’m happy to have contributed my little bit. Let me know what you think.

VHS Shader

A show that I’m currently working on was asking about a VHS look. It made me think of a sequence I did a while ago for Keanu, shot by Jas Shelton. In this sequence, we needed to add Keegan-Michael Key to the Faith music video by George Michael.

Often, as colorists, we are looking to make the image look the best it can. This was a fun sequence because stepping on an image to fit a creative mood or period is sometimes harder than making it shiny and beautiful. When done correctly (I’m looking at you, Tim and Eric Awesome Show Great Job) it can be a very effective tool for storytelling.

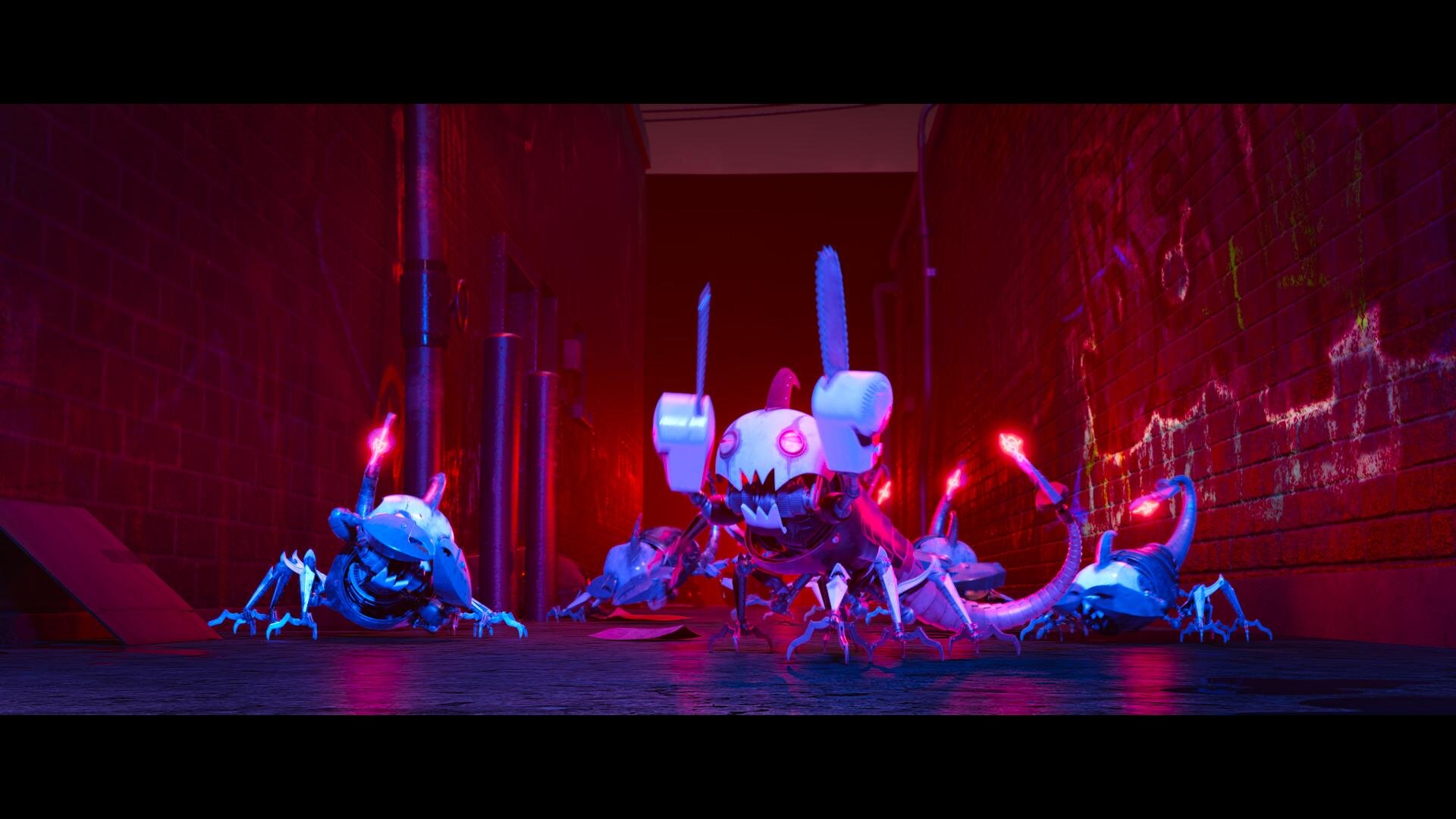

We uprezzed the Digibeta of the music video. I then used a custom shader to add the VHS distress to the Arri Alexa footage of Key. I mixed the shader in using opacity until it felt like it was a good match. Jas and I wanted to go farther, but we got some pushback from the suits. I think in the end the sequence came out great and is one of my favorite parts of the film… and the gangster kitty of course! Please find the shader used below if you would like to play.

alllll awwwww Keanu!

The War With Grandpa

Hey everyone! Here is a project I graded prior to the shutdown. The picture is eyeing an October 9th release. Fingers crossed we are in a better place with COVID by then. Check out the link below.

Finishing Scoob!

“Scoob!” is out today. Check out my work on the latest Warner Animation title. Here are some highlights and examples of how we pushed the envelope of what is possible in color today.

Building the Look

ReelFX was the animation house tasked with bringing “Scoob!” from the boards to the screen. I had previously worked with them on “Rock Dog” so there was a bit of a shorthand already in place. I already had a working understanding of their pipeline and the capabilities of their team. When I came on-board, production was quite far along with the show look. Michael Kurinsky (Production Designer) had already been iterating through versions addressing lighting notes from Tony Cervone (Director) through a LUT that ReelFX had created. This was different from “Smallfoot” where I had been brought on during lighting and helped in the general look creation from a much earlier stage. The color pipeline for “Scoob!” was Linear Arri Wide Gamut EXR files -> Log C Wide Gamut working space -> Show LUT -> sRGB/709. Luckily for me, I would have recommended something very similar. One challenge was that the LUT was only a forward transform with no inverse and only built for rec.709 primaries. We needed to recreate this look targeting P3 2.6 and ultimately rec.2020 PQ.

Transform Generation

Those of you that know me, know that I kind of hate LUTs. My preference is to use curves and functional math whenever possible. This is heavier on the GPUs but with today’s crop of ultra-fast processing cards, it hardly matters. So, my first step was to take ReelFX’s LUT and match the transform using curves. I went back and forth with Mike Fortner from ReelFX until we had an acceptable match.

My next task was to take our new functional forward transform and build an inverse. This is achieved by finding the delta from a 1.0 slope and multiplying that value by -1. Inverse transforms are very necessary for today’s deliverable climate. For starters, you will often receive graphics, logos, and titles in display referred spaces such as P3 2.6 or rec.709. The inverse show LUT allows you to place these into your working space.

Curve and its inverse function
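For anyone who wants to play with the idea, here is a minimal sketch of inverting a monotonic curve numerically: sample the forward curve, swap the input and output axes, and interpolate, which amounts to mirroring the curve across the 1.0-slope line. This is a generic stand-in technique, not the actual show transform. `forward_curve` is a made-up power curve and the sample count is an arbitrary assumption.

```python
from bisect import bisect_left


def forward_curve(x: float) -> float:
    """Hypothetical stand-in for a monotonic look curve on 0..1."""
    return x ** 0.6


def build_inverse(curve, samples: int = 1025):
    """Sample a strictly increasing curve and return a function that inverts it."""
    xs = [i / (samples - 1) for i in range(samples)]
    ys = [curve(x) for x in xs]

    def inverse(y: float) -> float:
        # Clamp to the range the forward curve can actually produce.
        if y <= ys[0]:
            return xs[0]
        if y >= ys[-1]:
            return xs[-1]
        # Find the two samples that bracket y, then interpolate with axes swapped.
        i = bisect_left(ys, y)
        t = (y - ys[i - 1]) / (ys[i] - ys[i - 1])
        return xs[i - 1] + t * (xs[i] - xs[i - 1])

    return inverse


inverse_curve = build_inverse(forward_curve)
```

Running a value through `forward_curve` and then `inverse_curve` should land back on the starting value to within the sampling error, which is the round trip you want when placing display referred graphics into a working space.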

After the Inverse was built, I started to work on the additional color spaces I would be asked to deliver. This included the various forward transforms to P3 2.6 for theatrical, rec.2020 limited to P3 with a PQ curve for HDR, and rec.709 for web/marketing needs. I took all of these transforms and baked them into a family DRT. This is a feature in Baselight where the software will automatically use the correct transform based on your output. A lot of work up front, but a huge time saver on the back end; plus less margin for error since it is set programmatically.

Trailers First

The first pieces that I colored with the team were the trailers. This was great since it afforded us the opportunity to start developing workflows that we would use on the feature.

My friend in the creative marketing world once said to me “I always feel like the trailer is used as the test.” That’s probably because the trailer is the first picture that anybody will see. You need to make sure it’s right before it’s released to the world.

Conform

Conform is one aspect of the project where we blazed new paths. It’s common to have 50 to 60 versions of a shot as it gets ever more refined and polished through the process. This doesn’t just happen in animation. Live-action shows with lots of VFX (read: photo-real animation) go through this same process.

We worked with Filmlight to develop a workflow where the version tracking was automated. In the past, you would need an editor to be re-conforming or hand dropping in shots as new versions came in. On “Scoob!”, a database was queried and the correct shot if available was automatically placed in the timeline. Otherwise, if not available, the machine would use the latest version delivered to keep us grading until the final arrives. This saves a huge amount of time (read: money).
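The selection rule described above can be sketched in a few lines. This is only an illustration of the logic, not the actual Filmlight integration: the function name is hypothetical, and the database is reduced to a plain list of delivered version numbers.

```python
from typing import Optional


def pick_version(delivered: list[int], requested: Optional[int]) -> Optional[int]:
    """Choose which version of a shot goes in the timeline.

    delivered: version numbers that have arrived (stand-in for a database query).
    requested: the version editorial is calling for, if known.
    """
    if not delivered:
        return None  # nothing on disk yet for this shot
    if requested is not None and requested in delivered:
        return requested  # the correct shot is available
    return max(delivered)  # otherwise keep grading on the latest delivery


# Example: v12 is called for but only v9..v11 have landed, so v11 is used.
current = pick_version([9, 10, 11], requested=12)
```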

Grading

Coloring for animation

I often hear, “It’s animation… doesn’t it come to you already correct?” Well, yes and no. What we do in the bay for animation shows is color enhancement; not color correction. Often, we are taking what was rendered and getting it that last mile to where the Director, Production Designer, and Art Director envisioned the image to be.

This includes windows and lighting tricks to direct the eye and enhance the story. Also, the use of secondaries to further stretch the distance between two complementary colors, effectively adding more color contrast. Speaking of contrast, it was very important to Tony, that we never were too crunchy. He always wanted to see into the blacks.

These were the primary considerations when coloring “Scoob!” Take what is there and make it work the best it can to promote the story the director is telling. Which takes me to my next tool and technique that was used extensively.

Deep Pixels and Depth Mattes

I’ve always said, if you want to know what we will be doing in color five years from now, look at what VFX is doing today. Five years ago in VFX, deep pixels, or voxels as they are sometimes called, were all the rage. Today they are a standard part of any VFX or Animation pipeline. Often they are thrown away because color correctors either couldn’t use them or it was too cumbersome. Filmlight has recently developed tools that allow me to take color grading to a whole other dimension.

A standard pixel has 5 values: R, G, B and X, Y. A voxel has 6 values: R, G, B and X, Y, Z. Basically for each pixel in a frame, there is another value that describes where it is in space. This allows me to “select” a slice of the image to change or enhance.

This matte also works with my other 2D qualifiers turning my circles and squares into spheres and cubes. This allows for corrections like “more contrast but only to the foreground” or desaturate the character behind Scooby, but in front of Velma.
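To make the “slice” idea concrete, here is a small sketch of a depth matte used as a qualifier. It assumes each pixel carries a Z value alongside RGB; the function names, the linear softness falloff, and the simple gain are all illustrative assumptions, not how Baselight implements it.

```python
def depth_matte(z: float, near: float, far: float, softness: float = 0.0) -> float:
    """Return a 0..1 matte weight for a pixel at depth z.

    Weight is 1 inside [near, far] and ramps linearly to 0 over `softness`
    units outside that range.
    """
    if near <= z <= far:
        return 1.0
    if softness <= 0.0:
        return 0.0
    distance = (near - z) if z < near else (z - far)
    return max(0.0, 1.0 - distance / softness)


def grade_slice(pixels, near, far, gain, softness=0.0):
    """Apply a gain only to pixels selected by the depth matte.

    pixels: iterable of (r, g, b, z) tuples.
    """
    graded = []
    for r, g, b, z in pixels:
        weight = depth_matte(z, near, far, softness)
        k = 1.0 + (gain - 1.0) * weight  # blend between no change and full gain
        graded.append((r * k, g * k, b * k, z))
    return graded
```

Multiplying this weight by a regular 2D shape matte is what turns a circle into a sphere: the correction only lands where both the XY shape and the Z range agree.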

Using the depth mattes along with my other traditional qualifiers all but eliminated the need for standard alpha style mattes. This not only saves a ton of time in color since I’m only dealing with one matte but also generates savings in other departments. For example with fewer mattes, your EXR file size is substantially smaller, saving on data management costs. Additionally, on the vendor side, ReelFX only had to render one additional pass for color instead of a matte per character. Again, a huge saving of resources.

I’m super proud of what we were able to accomplish on “Scoob!” using this technique and I can’t wait to see what comes next as this becomes standard for VFX deliveries. A big thank you to ReelFX for being so accommodating to my mad scientist requests.

Corona Time

Luckily, we were done with the theatrical grade before the pandemic hit. Unfortunately, we were far from finished. We were still owed the last stragglers from ReelFX and had yet to start the HDR grade.

Remote Work

We proceeded to set up a series of remote options. First, we set up a calibrated display at Kurinsky’s house. Next, I upgraded my connection to my home color system to allow for faster upload speeds. A streaming session would have been best but we felt that would put too many folks in close contact since it does take a bit of setup. Instead, I rendered out high-quality Prores XQ files. Kurinsky would then give notes on the reels over Zoom or email. I would make changes, rinse and repeat. For HDR, Kurinsky and I worked off a pair of x300s. One monitor was set for 1000nit rec.2020 PQ and the other for the 100nit 709 Dolby trim pass. I also made H.265 files that would play off a thumb drive once plugged into an LG E-series OLED. Finally, Tony approved the 1.78 pan and scan in the same way.

I’m very impressed with how the whole team managed to not only complete this film but finish it to the highest standards under incredibly trying times. An extra big thank you to my right-hand man Leo Ferrini who was nothing but exceptional during this whole project. Also, my partner in crime, Paul Lavoie, whom I have worked with for over 20 years. Even though he was at home, it felt like he was right there with me. Another big thanks.

Check Out the Work

Check out the movie at the link below and tell me what you think.

Thanks for reading!

-John Daro

SCOOB! and Best Friends Animal Society

It's not a mystery, everyone needs a best friend! I couldn’t imagine life without my little man Toshi! Watch Shaggy meet his new friend, rescued dog Scooby Doo, in this new PSA from @BestFriendsAnimalSociety. And you can enjoy Exclusive Early Access to the new animated movie @SCCOB with Home Premiere! Available to own May 15th.

@scoob #SCOOB @bestfriendsanimalsociety #SaveThemAll #fosteringsaveslives #thelabellefoundation

SCOOB!

My Dog Toshi

A Huge thank you to TheLabelleFoundation for bringing us together!

How To - AAF and EDL Export

AAF and EDL Exporting for Colorists

Here is a quick how-to on exporting AAFs and EDLs from an Avid bin. Disclaimer - This is for colorists, not editors!

Exporting an AAF:

First, open your project. Be sure to set the frame rate correctly if you are starting a new project or importing a bin from another.

Next, open the bin that contains the sequence you want to export an AAF from.

Select the timeline and right click it. This sequence should already be cleaned for color. Meaning, camera source on v1, opticals on v2, speed fx on v3, vfx on v4, titles and graphics on v5.

After you right click, go “Output” -> “Export to File”

Navigate to the path that you want to export to. Then, click “Options”

In the “Export As” pulldown select “AAF.” Next, un-check “Include Audio Tracks in Sequence” and make sure “Export Method:” is set to “Link to (Don’t Export) Media.” Then click “Save” to save the settings and return to the file browser.

Give the AAF a name and hit “Save.” That’s it! Your AAF is sitting on the disk now.

Exporting an EDL:

We should all be using AAFs to make our conform lives easier, but if you need an EDL for a particular piece of software or just want something that is easily read by a human, here you go.

Set up the project and import your bin the same as an AAF. Instead of right-clicking on the sequence, go to “Tools” -> “List Tool” and it will open a new window. I’m probably dating myself, but back in my day, this was called “EDL Manager.” List Tool is a huge improvement since it lets you export multi-track EDLs quickly.

Select “File_129” from the “Output Format:” pull-down. This sets the tape name to 129 characters (0 through 128 = 129), which is the limit for a filename in most operating systems. Next, click the tracks you want to export.

Double-click your sequence in the bin to load your timeline into the record monitor. Then click “Load” in the “List Tool” window. At this point, you can click “Preview” to see your EDL in the “Master EDL” tab. To save, click the “Save List” pull-down and choose “To several files.” This option will make one EDL per video track. Choose your file location in the browser and hit save. That’s it. Your EDLs are ready for conforming or notching.

Alternate Software EDL Export

That’s great John, but what if I’m using something else other than Avid?

Here are the methods for EDL exports in Filmlight’s Baselight, BMD DaVinci Resolve, Adobe Premiere Pro, and SGO Mistika in rapid-fire. If you are using anything else… please stop.

Baselight

Open “Shots” view (Win + H) and click the gear pull-down. Next click “Export EDL.” The exported EDL will respect any filters you may have in “Shots” view, which makes it a very powerful tool, but also something to keep an eye on.

Resolve

In the media manager, right-click your timeline and select “Timelines” -> “Export” -> “AAF/XML/EDL”

Premiere Pro

Make sure your “Tape Name” column is populated.

Make sure to have your Timeline selected. Then go, “File” -> “Export” -> “EDL”

The most important setting here is “32 character names.” Sometimes this is called “File32” in other software. Checking this ensures the file name in its entirety (as long as it’s not longer than 32 characters) will be placed into the tape ID location of the EDL.

Mistika

Set your marks in the Timespace where you want the EDL to begin and end. Then select “Media” -> “Output” -> “Export EDL2” -> “Export EDL.” Once pressed you will see a preview of the EDL on the right.

No matter what the software is, the same rules apply for exporting.

Clean your timeline of unused tracks and clips.

Ensure that your program has leader and starts at an hour or ##:59:52:00

Camera source on v1, Opticals on v2, Speed FX on v3, VFX on v4, Titles and Graphics on v5
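A quick bit of timecode math for the leader rule: a ##:59:52:00 start leaves 8 seconds before the hour. The sketch below assumes non-drop-frame timecode at 24 fps, and the function names are just for illustration.

```python
def tc_to_frames(tc: str, fps: int = 24) -> int:
    """Convert non-drop-frame HH:MM:SS:FF timecode to a frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def leader_frames(start_tc: str, fps: int = 24) -> int:
    """Frames between the program start and the next hour mark."""
    frames_per_hour = 3600 * fps
    return (-tc_to_frames(start_tc, fps)) % frames_per_hour


# 00:59:52:00 at 24 fps leaves 192 frames (8 seconds) of leader.
leader = leader_frames("00:59:52:00")
```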

Many of us are running lean right now. I hope this helps the folks out there who are working remotely without support and the colorists who don’t fancy editorial or perhaps haven’t touched those tools in a while.

Happy Grading!

JD

Brockmire Season 4 Starts Today

Supervised from pre-production through delivery. A total binge-worthy show. Perfect for these crazy times. A great example of collaboration between Atlanta and Burbank facilities.

3D - A Beginners Guide to Stereoscopic Understanding

Early Days

My interest in Stereoscopic imaging started in 2006. One of my close friends, Trevor Enoch, showed me a stereo-graph that was taken of him while out at Burning Man. I was blown away and immediately hooked. I spent the next four years experimenting with techniques to create the best, most comfortable, and immersive 3D I could. In 2007, I worked on “Hannah Montana and Miley Cyrus: Best of Both Worlds Concert” directed by Bruce Hendricks and shot by cameras provided by Pace. Jim Cameron and Vince Pace were already developing the capture systems for the first “Avatar” film. The challenge was that a software package had yet to be created to post stereo footage. To work around this limitation, Bill Schultz and I slaved two Quantel IQ machines to a Bufbox to control the two color correctors simultaneously. This solution was totally inelegant but it was enough to award us the job from Disney. Later during the production, Quantel came out with stereo support eliminating the need to color each eye on independent machines.

We did what we had to in those early days. When I look back at that film, there is a lot that I would do differently now. It was truly the wild west of 3D post and we were writing the rules (and the code for the software) as we went. Over the next few pages I’m going to lay out some basics of 3D stereo imaging. The goal is to have a working understanding of the process and technical jargon by the end. Hopefully I can help some other post professionals avoid a lot of the pitfalls and mistakes I made as we blazed the trail all those years ago.

Camera 1, Camera 2

Stereopsis is the term that describes how we collect depth information from our surroundings using our sight. Most everyone is familiar with stereo sound, where two separate audio tracks are played simultaneously out of two different speakers. We can take that information in using both of our ears (binaural hearing) and create a reasonable approximation of the direction that sound is coming from in space. This approximation is calculated by the offset in time of the sound hitting one ear vs the other.

Stereoscopic vision works much in the same way. Our eyes have a point of interest. When that point of interest is very far away our eyes are parallel to one another. As we focus on objects that are closer to us, our eyes converge. Do this simple experiment right now. Hold up your finger as far away from your face as you can. Now slowly bring that finger towards your nose, noting the angle of your eyes as you get closer to your face. Once your finger is about 3 inches away from your face, alternately close one eye and then the other. Notice the view as you alternate between your eyes, camera 1, camera 2, camera 1, camera 2. Your finger moves position from left to right. You also see “around” your finger more in one eye vs the other. This offset between your two eyes is how your brain makes sense of the 3D world around you. To capture this depth for films we need to recreate this system by utilizing two cameras spaced roughly the same distance apart as your eyes.

Camera Rigs

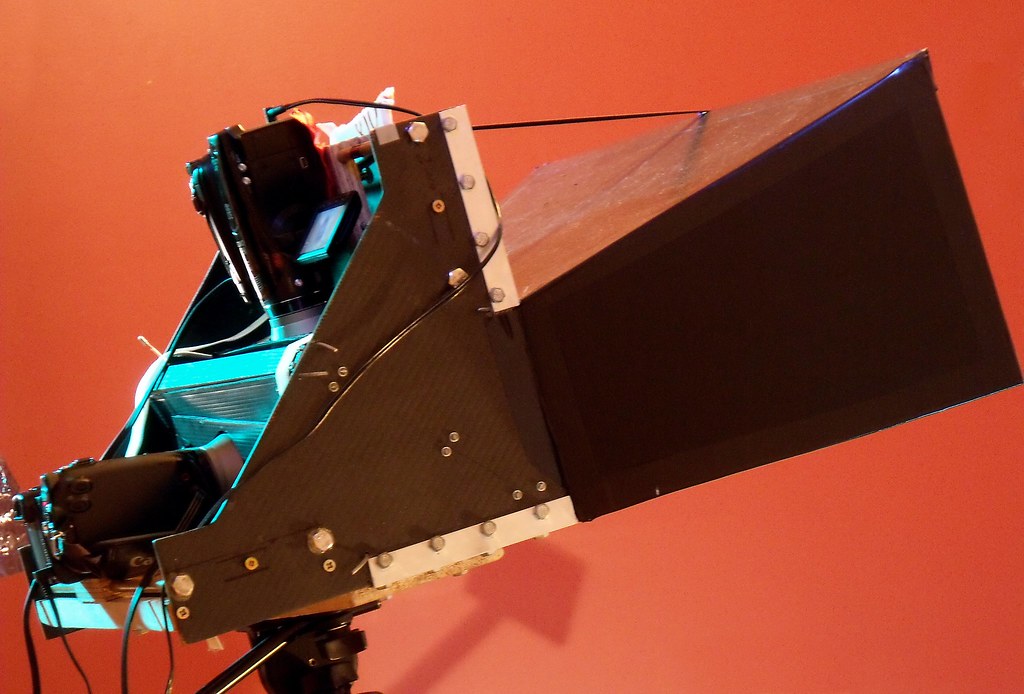

The average interpupillary distance is 64mm. Since most feature grade cinema cameras are rather large, special rigs for aligning them together need to be employed. Side by side rigs are an option when your cameras are small, but when they are not you need to use a beam splitter configuration.

Beam splitter rig in an “over” configuration.

Essentially, a beam splitter rig uses a half silvered mirror to “split” the view into two. This allows the cameras to shoot at a much closer inter-axial distance than they would otherwise be able to using a parallel side by side rig. Both of these capture systems are for the practical shooting of 3D films. Fortunately or unfortunately, most 3D films today use a technique called Stereo Conversion.

Image comes in from position 1. Passes through to camera at position 2. It is also reflected to the camera at position 3. You will need to flip it in post since the image is mirrored.

Conversion

There are three main techniques for Stereo Conversion.

Roto and Shift

In this technique, characters and objects in the frame are roto’d out and placed in a 3D composite in virtual space. The scene is then re-photographed using a pair of virtual cameras. The down side to this is that the layers often lack volume and the overall effect feels like a grade school diorama.

Projection

For this method, the 2D shot is modeled in 3D space. Then, the original 2D video is projected onto the 3D models and re-photographed using a pair of virtual cameras. This yields very convincing stereo and looks great, but can be expensive to generate the assets needed to create complex scenes.

Virtual World

Stupid name, but I can’t really think of anything better. In this technique, scenes are created entirely in 3D programs like Maya or 3DS Max. As this is how most high end VFX are created for larger films, some of this work is already done. This is the best way to “create” Stereo images since the volumes, depth and occlusions are mimicking the real world. The downside to this is that if your 2D VFX shot took a week to render in all of its ray traced glory, your extra “eye” will take the same.

Cartesian Plane

No matter how you acquire your stereo images eventually you are going to take them into post production. In Post, I make sure the eyes are balanced for color between one another. I also “set depth” for comfort and to creatively promote the narrative.

In order to set depth we will have to offset one eye against the other. Objects in space gain their depth from the relative offset in the other eye/view. In order to have a consistent language, we speak in number of pixels offset to describe this depth.

When we discuss 2D images we use pixel values that are parallel with the screen. A given coordinate pair locates the pixel along the screen’s surface.

Once we add the 3rd axis we need to think of a Cartesian plane laying down perpendicular to the screen. Positive numbers are receding away from the viewer into the screen. Negative numbers come off the screen towards the viewer.

The two views are combined for the viewing system. The three major systems are Dolby, RealD, and XpanD. There are others, but these are the most prevalent in theatrical exhibition.

In Post we control the relative offset between the two views using a “HIT” or horizontal image transform. A very complicated way of saying we move one eye right or left along the X axis.

The value of the offset dictates where in space the object will appear. This rectangle is traveling from +3 pixels offset to -6 pixels offset.

Often we will apply this move symmetrically to both eyes. In other words, to achieve a -6 pixel offset, we may move each view 3 pixels in opposite directions instead of moving one view 6.
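Here is a rough sketch of a HIT on a single scanline, plus a helper that splits a relative offset between the two eyes. The sign convention is an assumption for this example: the relative offset is the right-eye shift minus the left-eye shift, so positive values push into the screen and negative values come out toward the viewer. The function names are made up.

```python
def split_offset(offset_px: int) -> tuple[int, int]:
    """Split a relative offset into (left_shift, right_shift).

    The two eyes move in opposite directions so that
    right_shift - left_shift == offset_px.
    """
    right = offset_px // 2
    left = right - offset_px
    return left, right


def hit(row: list, shift_px: int, fill=0) -> list:
    """Shift one scanline horizontally.

    Positive shift moves picture content to the right; vacated pixels are
    filled with `fill` (real tools would typically crop or blow up instead).
    """
    n = len(row)
    if shift_px == 0:
        return list(row)
    if abs(shift_px) >= n:
        return [fill] * n
    if shift_px > 0:
        return [fill] * shift_px + row[: n - shift_px]
    return row[-shift_px:] + [fill] * (-shift_px)


# A -6 pixel offset becomes +3 on the left eye and -3 on the right eye.
left_shift, right_shift = split_offset(-6)
```

Splitting the move across both eyes keeps the picture centered and halves the amount of edge that each view loses.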

Using this offset we can begin to move comped elements or the entire “world” in Z space. This is called depth grading. Much like color, our goal is to try and make the picture feel consistent without big jumps in depth. Too many large jumps can cause eye strain and headaches. My first rule of depth grading is “do no harm.” Pain should be avoided at all costs. However, there is another aspect of depth grading beyond the technical side. Often we use depth to promote the narrative. For example, you may pull action forward to be more immersed in the chaos, or you can play quiet drama scenes at screen plane so that you don’t take away from performance. Establishing shots are best played deep for a sense of scale. Now all of these examples are just suggestions and not rules. Just my approach.

Once you know the rules, you are allowed to break them as long as it’s motivated by what’s on screen. I remember one particular shot in Jackass 3D where Bam gets his junk whacked. I popped the offset towards the audience just for that frame. I doubt anybody noticed other than a select circle of 3D nerds (I’m looking at you Captain 3D) but I felt it was effective to make the pain on screen “felt” by the viewer.

Floating Windows

Floating Windows are another tool that we have at our disposal while working on the depth grade. When we “Float the Window” what we are actually doing is controlling the proscenium in depth just like we were moving the “world” while depth grading. Much like depth offsets, floating windows can be used for technical and creative reasons. Firstly, they are most commonly used for edge violations. An edge violation is where there is an object that is “in front” of the screen in Z space, but is being occluded by the screen. Now our brains are smarter than our eyeballs and kick into over-ride mode. The edge of the broken picture feels uncomfortable and all sense of depth is lost. What we do to fix this situation is move the edge of the screen forward into the theater using a negative offset. This floats the “window” we are looking through in front of the offending object and our eyes and brain are happy again.

We achieve a floating window through a crop or by using the software’s “window” tool.

The fish is at +1 behind screen, but in front of the +3 proscenium. The fish will have the feeling of being off screen even though it’s behind.

Another use for controlling the depth of the proscenium is to creatively enhance the perceived depth. Often, you need to keep a shot at a certain depth due to what is on either side of the cut but creatively want it to feel more forward. A great work around is to keep your subject at the depth that feels comfortable to the surrounding shots and move the “screen” back into positive space. This can have the effect of feeling as if the subject is in negative space without actually having to place them there. Conversely you can float the window into negative space on both sides to create the feeling of distance even if your character or scene is at screen plane with a zero offset.
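One way to reason about this is to think in terms of the object’s offset relative to the window rather than relative to the physical screen. The sketch below is only a mental model with made-up function names; it uses the same convention as the rest of this post, where positive offsets are behind the screen and negative offsets are in front.

```python
def relative_to_window(object_offset_px: float, window_offset_px: float) -> float:
    """Offset of an object measured from the floating window, not the screen."""
    return object_offset_px - window_offset_px


def perceived_side(object_offset_px: float, window_offset_px: float) -> str:
    """Describe where the object feels like it sits relative to the window."""
    rel = relative_to_window(object_offset_px, window_offset_px)
    if rel < 0:
        return "in front of window"
    if rel > 0:
        return "behind window"
    return "at window"


# The fish example: object at +1, proscenium pushed back to +3.
fish = perceived_side(1, 3)
```

With the fish at +1 and the window at +3 the relative offset is -2, so the fish reads as being off screen even though it never leaves positive space.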

Stereo Color Grading

Stereo color grading is an additional step, when compared to standard 2D finishing, which needs to be accomplished after the depth grade is complete. Native shot 3D footage is much more challenging to match color from one eye to another. Reflections or flares may appear in one and not the other. We call this retinal conflict. One fix for such problems is to steal the “clean” information from one eye and comp it over the offending one, paying mind to offset for the correct depth.

Additionally, any shapes that were used in the 2D grade will have to be offset for depth. Most professional color grading software has automated ways to do this. In rare instances, an overall color correction is not enough to balance the eyes. When this occurs, you may need a localized block based color match like the one found in the Foundry’s Ocula plugin for Nuke.

Typically a 4.5FL and a 7FL master are created with different trim values. In recent years, a 14FL version is also created for stereo laser projection and Dolby’s HDR projector. In most cases this is as simple as a gamma curve and a sat boost.
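A trim of that kind can be sketched as a gamma followed by a saturation scale around luma. This is an illustration only: the Rec.709 luma weights are an assumption, the function name is made up, and the numbers in the usage line are placeholders rather than real trim values.

```python
def trim(rgb, gamma: float = 1.0, sat: float = 1.0):
    """Apply a simple gamma and saturation trim to a display referred RGB triplet.

    rgb: values in 0..1. gamma < 1 lifts the image, sat > 1 boosts saturation.
    """
    r, g, b = (max(0.0, c) ** gamma for c in rgb)
    # Rec.709 luma weights, assumed here as the saturation pivot.
    luma = 0.2126 * r + 0.7152 * g + 0.0722 * b
    return tuple(luma + (c - luma) * sat for c in (r, g, b))


# Placeholder numbers for a dimmer master: lift slightly and add a touch of color.
dim_master_pixel = trim((0.40, 0.30, 0.20), gamma=0.95, sat=1.1)
```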

The Future of Stereo Exhibition

The future for 3D resides in even deeper immersive experiences. VR screens are becoming higher in resolution and, paired with accelerometers, are providing a true “be there” experience. I feel that the glasses and apparatus that are required for stereo viewing also contributed to its falling out of vogue in recent years. I’m hopeful that new technological enhancements and a better, more easily accessible user experience will lead to another resurgence in the coming years. Ultimately, creating the most immersive content is a worthy goal. Thanks for reading and please leave a comment with any questions or differing views. They are always welcome.

WRITTEN BY

John Daro

The Rook :: A Global Collaboration

Here is the latest trailer for the Rook. This show was graded as a collaboration between Asa Shoul at WB De Lane Lea in London and John Daro at Warner Color in Burbank. Mastered in Dolby Vision HDR. Check it out June 30th on Starz.

The Last Summer Trailer →

Strong grade, proud of this one. Cinematography by Luca Del Puppo. Colored by John Daro.

A Brief History of HDR

A brief history of John Daro’s experience creating HDR moving images.

"Walk. Ride. Rodeo." out today on Netflix

HDR - Flavors and Best Practices

Better Pixels.

Over the last decade we have had a bit of a renaissance in imaging display technology. The jump from SD to HD was a huge bump in image quality. HD to 4k was another noticeable step in making better pictures, but had less of an impact than the previous SD to HD jump. Now we are starting to see 8k displays and workflows. Although this is great for very large screens, this jump has diminishing returns for smaller viewing environments. In my opinion, we are at the point where we do not need more pixels, but better ones. HDR or High Dynamic Range images along with wider color gamuts are allowing us to deliver that next major increase in image quality. HDR delivers better pixels!

Stop… What is dynamic range?

When we talk about the dynamic range of a particular capture system, what we are referring to is the delta between the blackest shadow and the brightest highlight captured. This is measured in Stops, typically with a light meter. A Stop is a doubling or a halving of light. This power of 2 way of measuring light is perfect for its correlation to our eyes’ logarithmic nature. Your eyeballs never “clip” and a perfect HDR system shouldn’t either. The brighter we go the harder it becomes to see differences but we never hit a limit.

Unfortunately digital camera sensors do not work in the same way as our eyeballs. Digital sensors have a linear response, a gamma of 1.0 and do clip. Most high-end cameras convert this linear signal to a logarithmic one for post manipulation.

I was never a huge calculus buff but this one thought experiment has served me well over the years.

Say you are at one side of the room. How many steps will it take to get to the wall if each time you take a step, the step is half the distance of your last? This is the idea behind logarithmic curves.

It will take an infinite number of steps to reach the wall, since we can always halve the half.
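The thought experiment is easy to play with in a few lines. This sketch assumes the first step covers half the room and every step after that covers half of what is left; the function name is just for illustration.

```python
def remaining_after(steps: int, room: float = 1.0) -> float:
    """Distance still left to the wall after a number of halving steps."""
    remaining = room
    for _ in range(steps):
        remaining -= remaining / 2  # each step covers half of what is left
    return remaining


# In an 8 unit room, three steps leave 1 unit; fifty steps still leave a sliver.
after_three = remaining_after(3, room=8.0)
after_fifty = remaining_after(50, room=8.0)
```

Each step is one stop closer to the wall, which is why a log encoding spends the same number of code values on every halving of light.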

Someday we will be able to account for every photon in a scene, but until that sensor is made we need to work within the confines of the range that can be captured.

For example if the darkest part of a sampled image are the shadows and the brightest part is 8 stops brighter, that means we have a range of 8 stops for that image. The way we expose a sensor or a piece of celluloid changes based on a combination of factors. This includes aperture, exposure time and the general sensitivity of the imaging system. Depending on how you set these variables you can move the total range up or down in the scene.

Let’s say you had a scene range of 16 stops. This goes from the darkest shadow to direct hot sun. Our imaging device in this example can only handle 8 of the 16 present stops. We can shift the exposure to be weighted towards the shadows, the highlights, or the Goldilocks sweet spot in the middle. There is no right or wrong way to set this range. It just needs to yield the picture that helps to promote the story you are trying to tell in the shot. A 16bit EXR file can handle 32 stops of range. Much more than any capture system can deliver currently.
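Because a stop is a doubling, the range between two light levels in stops is just a base 2 logarithm of their ratio. The sketch below shows that, along with a toy model of sliding an 8 stop capture window around inside a 16 stop scene. The `bias` parameter and both function names are made up for illustration.

```python
import math


def stops_between(darkest: float, brightest: float) -> float:
    """Dynamic range in stops between two linear light levels."""
    return math.log2(brightest / darkest)


def exposure_window(scene_stops: float, sensor_stops: float, bias: float = 0.5):
    """Return the (low, high) slice of the scene range the sensor captures.

    bias = 0.0 protects the shadows, 1.0 protects the highlights,
    0.5 sits in the middle.
    """
    headroom = scene_stops - sensor_stops
    low = headroom * bias
    return low, low + sensor_stops


# A highlight 256 times brighter than the shadow is 8 stops away.
range_stops = stops_between(1.0, 256.0)
```

Calling `exposure_window(16, 8, 0.0)` returns `(0.0, 8.0)` for a shadow weighted exposure, while a bias of `1.0` returns `(8.0, 16.0)` and gives up the bottom half of the scene to hold the sun.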

Latitude is how far you can recover a picture from over or under exposure. Often latitude is conflated with dynamic range. In rare cases they are the same but more often than not your latitude is less than the available dynamic range.

Film, the original HDR system.

Film from its creation always captured more information than could be printed. Contemporary stocks have a dynamic range of 12 stops. When you print that film you have to pick the best 8 stops to show via printing with more or less light. The extra dynamic range was there in the negative but was limited by the display technology.

Flash forward to our digital cameras today. Cameras from Arri, Red, Blackmagic, and Sony all boast dynamic ranges over 13 stops. The challenge has always been the display environment. This is why we need to start thinking of cameras not as the image creators but more as the photon collectors for the scene at the time of capture. The image is then “mapped” to your display creatively.

Scene referred grading.

The problem has always been how do we fit 10 pounds of chicken into an 8 pound bag? In the past when working with these HDR camera negatives we were limited to the range of the display technology being used. The monitors and projectors before their HDR counterparts couldn’t “display” everything that was captured on set even though we had more information to show. We would color the image to look good on the device for which we were mastering. “Display Referred Grading,” as this is called, limits your range and bakes in the gamma of the display you are coloring on. This was fine when the only two mediums were SDR TV and theatrical digital projection. The difference between 2.4 video gamma and 2.6 theatrical gamma was small enough that you could make a master meant for one look good on the other with some simple gamma math. Today the deliverables and masters are numerous with many different display gammas required. So before we even start talking about HDR, our grading space needs to be “Scene Referred.” What this means is that once we have captured the data on set, we pass it through the rest of the pipeline non-destructively, maintaining the relationship to the original scene lighting conditions. “No pixels were harmed in the making of this major motion picture.” is a personal mantra of mine.
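The “simple gamma math” between a 2.4 video master and a 2.6 theatrical one can be sketched as decoding with one power function and re-encoding with the other. This is a bare-bones illustration that assumes pure power-law displays and ignores the difference in peak brightness and viewing surround; the function name is made up.

```python
def regamma(code_value: float, source_gamma: float = 2.4, target_gamma: float = 2.6) -> float:
    """Re-encode a normalized code value so it shows the same relative light
    on a display with a different power-law gamma."""
    light = max(0.0, code_value) ** source_gamma  # what the source display would emit
    return light ** (1.0 / target_gamma)          # code value that emits the same on the target


# Mid-range values move up slightly going from 2.4 to 2.6.
mid = regamma(0.5)
```

Black and white stay put, and everything in between shifts by the exponent ratio 2.4/2.6, which is why a master for one could be made to look good on the other.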

I’ll add the tone curve later.

There are many different ways of working scene-referred. The VFX industry has been working this way for decades. The key point is we need to have a processing space that is large enough to handle the camera data without hitting the boundaries, i.e. clipping or crushing in any of the channels. This “bucket” also has to have enough samples (bit-depth) to be able to withstand aggressive transforms. 10 bits are not enough for HDR grading. We need to be working in full 16-bit floating point.

This is a bit of an exaggeration, but it illustrates the point. Many believe that a 10-bit signal is sufficient for HDR. I think for color work 16-bit is necessary. This ensures we have enough steps to adequately describe the meat and potatoes part of the image in addition to the extra highlight data in the top half of the code values.

Bit-depth is like butter on bread. Not enough and you get gaps in your tonal gradients. We want a nice smooth spread on our waveforms.
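To put rough numbers on the butter analogy, the sketch below counts how many distinct code values fall inside a slice of the signal range at a given bit depth. It assumes simple integer quantization (not the 16-bit floating point recommended above, which distributes its steps differently), and the helper name `levels_in_range` is hypothetical.

```python
import math


def levels_in_range(bits, lo=0.25, hi=0.5):
    """Count integer code values whose normalized value lies in [lo, hi].

    Assumption: plain integer quantization, code / (2**bits - 1).
    """
    max_code = 2 ** bits - 1
    first = math.ceil(lo * max_code)   # first code value at or above lo
    last = math.floor(hi * max_code)   # last code value at or below hi
    return max(last - first + 1, 0)


if __name__ == "__main__":
    for bits in (10, 16):
        print(bits, "bit:", levels_in_range(bits), "steps between 0.25 and 0.5")
```

If an aggressive grade stretches that quarter of the range across the whole image, those steps are all the tonal resolution left. The 16-bit case has 64 times as many to spread around.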

Now that we have our non-destructive working space, we use transforms or LUTs to map to our displays for mastering. ACES is a good starting point for a working space and a set of standardized transforms, since it works scene referred and is always non-destructive if implemented properly. The gist of this workflow is that the sensor linearity of the original camera data has been retained. We are simply adding our display curve for each of our masters.

Stops measure scenes, Nits measure displays.

For measuring light on set we use stops. For displays we use a measurement unit called a nit. Nits are a measure of brightness (luminance), not dynamic range. A nit is equal to 1 cd/m². I’m not sure why there are two names for the same unit, but for displays we use the nit. Perhaps “candelas per meter squared” was just too much of a mouthful. A typical SDR monitor has a brightness of 100 nits. A typical theatrical projector has a brightness of 48 nits. There is no set standard for what is considered HDR brightness. I consider anything over 600 nits HDR. 1000 nits, or 10 times brighter than legacy SDR displays, is what most HDR projects are mastered to. The Dolby Pulsar monitor is capable of displaying 4000 nits, which is the highest achievable today. The PQ signal accommodates values up to 10,000 nits.
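Since a stop is a doubling of light, the peak levels quoted above can be compared in stops with a base-2 logarithm. The sketch below is only a comparison of peak luminance between displays. It says nothing about dynamic range, which would also need each display's black level. The function name `stops_between` is made up for illustration.

```python
import math


def stops_between(nits_a, nits_b):
    """Number of stops (doublings of light) from nits_a up to nits_b."""
    return math.log2(nits_b / nits_a)


if __name__ == "__main__":
    # Peak levels taken from the paragraph above.
    print("SDR monitor (100) -> HDR master (1000):", round(stops_between(100, 1000), 2))
    print("Theatrical (48)   -> HDR master (1000):", round(stops_between(48, 1000), 2))
    print("SDR monitor (100) -> Pulsar (4000):    ", round(stops_between(100, 4000), 2))
```

Ten times brighter works out to a little over three stops of extra headroom above SDR white.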

The Sony X300 has a peak brightness of 1000 nits and is the current gold standard for reference monitors.

The Dolby Pulsar is capable of a 4000 nit peak white.

P-What?

Rec2020 color primaries with a D65 white point

The most common scale to store HDR data is the PQ Electro-Optical Transfer Function. PQ stands for perceptual quantizer. The PQ EOTF was standardized when SMPTE published the transfer function as SMPTE ST 2084. The color primaries most often associated with PQ are Rec2020. BT.2100 is used when you pair the two: the PQ transfer function with Rec2020 primaries and a D65 white point. This is similar to how SDR video pairs Rec709 primaries and a D65 white point with the 2.4 gamma defined in BT.1886. It is possible to have a PQ file with different primaries than Rec2020. The most common variant would be P3 primaries with a D65 white point. Ok, sorry for the nerdy jargon but now we are all on the same page.
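For anyone who wants to play with the curve itself, here is a small Python sketch of the PQ transfer function using the constants published in SMPTE ST 2084. It works on a single normalized value and ignores primaries, white point, and integer code-value ranges (full vs. legal). The function names `pq_encode` and `pq_decode` are made up for this example.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0  # luminance represented by a PQ signal of 1.0


def pq_encode(nits):
    """Inverse EOTF: absolute luminance in nits -> normalized PQ signal (0..1)."""
    y = max(nits, 0.0) / PEAK_NITS
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1.0 + C3 * y_m1)) ** M2


def pq_decode(signal):
    """EOTF: normalized PQ signal (0..1) -> absolute luminance in nits."""
    e = max(signal, 0.0) ** (1.0 / M2)
    return PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


if __name__ == "__main__":
    for nits in (48, 100, 1000, 4000, 10000):
        print(nits, "nits ->", round(pq_encode(nits), 3))
```

Running it shows 100 nits landing at roughly half of the signal range and 1000 nits at roughly three quarters, so about half of the code values are reserved for everything brighter than SDR white.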

HDR Flavors

There are four main HDR flavors in use currently. All of them use a non-linear, roughly logarithmic approach to retain the maximum amount of information in the highlights.

Dolby Vision

Dolby Vision is the most common flavor of HDR out in the field today. The system works in three parts. First you start with your master that has been graded using the PQ EOTF. Next you “analyse” the shots in your project to attach metadata about where the shadows, highlights and meat and potatoes of your image are sitting. This is considered level 1 metadata, or L1. Next this metadata is used to inform the Content Mapping Unit, or CMU, how best to “convert” your picture to SDR and lower nit formats. The colorist can “override” this auto conversion using a trim that is then stored in level 2 metadata, commonly referred to as L2. The trims you can make include lift, gamma, gain, and sat. In version 4.0, out now, Dolby has given us the tools to also have secondary controls for six-vector hue and sat. Once all of these settings have been programmed they are exported into an XML sidecar file that travels with the original master. Using this metadata, a Dolby Vision-equipped display can use the trim information to tailor the presentation to accommodate the max nits it is capable of displaying on a frame-by-frame basis.

HDR 10

HDR 10 is the simplest of the PQ flavors. The grade is done using the PQ EOTF. Then the entire show is analysed. The average brightness and peak brightness are calculated. These two metadata points are called MaxCLL - Maximum Content Light Level and MaxFALL - Maximum Frame Average Light Level. Using these, a downstream display can adjust the overall brightness of the program to accommodate the display’s max brightness.
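Here is a hedged sketch of how those two numbers can be derived, following my understanding of the usual definition: for each pixel take the largest of its R, G, B values in nits, then MaxCLL is the brightest such pixel in the whole program and MaxFALL is the highest per-frame average. The data layout (a list of frames, each a list of RGB tuples already decoded to nits) and the function name `max_cll_fall` are assumptions for illustration. A real tool works on full-resolution decoded frames.

```python
def max_cll_fall(frames):
    """Return (MaxCLL, MaxFALL) in nits.

    frames: iterable of frames, each a non-empty list of (r, g, b) tuples
    holding linear light in nits (assumed already decoded from PQ).
    """
    max_cll = 0.0
    max_fall = 0.0
    for frame in frames:
        # Brightest channel of each pixel stands in for that pixel's light level.
        pixel_levels = [max(r, g, b) for r, g, b in frame]
        max_cll = max(max_cll, max(pixel_levels))
        frame_average = sum(pixel_levels) / len(pixel_levels)
        max_fall = max(max_fall, frame_average)
    return max_cll, max_fall


if __name__ == "__main__":
    # Two tiny two-pixel "frames" as toy data.
    demo = [
        [(100.0, 50.0, 10.0), (200.0, 0.0, 0.0)],
        [(1000.0, 10.0, 10.0), (0.0, 0.0, 0.0)],
    ]
    print(max_cll_fall(demo))
```

Note that both values are static for the whole program, which is what separates HDR 10 from the per-shot metadata of Dolby Vision.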

HDR 10+

HDR 10+ is similar to Dolby Vision in that you analyse your shots and can set a trim that travels in metadata per shot. The difference is you do not have any color controls. You can adjust points on a curve for a better tone map. These trims are exported as an XML file from your color corrector.

HLG

Hybrid log gamma is a logarithmic extension of the standard gamma curve of legacy displays. The lower half of the code values use a conventional gamma-style curve and the top half use a log curve. Combining the legacy gamma with a log curve for the HDR highlights is what makes this a hybrid system. This version of HDR is backwards compatible with existing displays and terrestrial broadcast distribution. There is no dynamic quantification of the signal. The display just shows as much of the signal as it can.
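The two-part shape is easy to see in code. Below is a sketch of the HLG OETF as I understand it from BT.2100: a square-root segment for the lower half of the signal (close to, though not identical with, a legacy camera gamma) and a logarithmic segment above it. It operates on one normalized scene-linear value and leaves out the display-side system gamma. The function name `hlg_oetf` is made up for this example.

```python
import math

# Constants from the BT.2100 HLG definition
A = 0.17883277
B = 1.0 - 4.0 * A
C = 0.5 - A * math.log(4.0 * A)


def hlg_oetf(scene_linear):
    """Normalized scene-linear light (0..1) -> normalized HLG signal (0..1)."""
    e = max(scene_linear, 0.0)
    if e <= 1.0 / 12.0:
        # Lower segment: square-root, gamma-like curve.
        return math.sqrt(3.0 * e)
    # Upper segment: logarithmic curve for the highlights.
    return A * math.log(12.0 * e - B) + C


if __name__ == "__main__":
    for e in (0.0, 1.0 / 12.0, 0.25, 0.5, 1.0):
        print(round(e, 4), "->", round(hlg_oetf(e), 4))
```

The crossover sits at a signal value of 0.5, which matches the “lower half, top half” split described above.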

Deliverables

Deliverables change from studio to studio. I will list the most common ones here that are on virtually every delivery instruction document. Depending on the studio, the names of these deliverables will change but the utility of them stays the same.

PQ 16-bit Tiffs

This is the primary HDR deliverable, and some of the other masters on the list are derived from it. These files typically have a D65 white point and are either Rec2020 or P3 limited inside of a Rec2020 container.

GAM

The Graded Archival Master has all of the color work baked in but does not have any output transforms. This master can come in three flavors, all of which are scene referred:

ACES AP0 - Linear gamma 1.0 with ACES primaries, sometimes called ACES prime.

Camera Log - The original camera log encoding with the camera’s native primaries. For example, for Alexa, this would be LogC Arri Wide Gamut.

Camera Linear - This flavor has the camera’s original primaries with a linear gamma 1.0

NAM

The non-graded assembly master is the equivalent of the VAM back in the day. It is just the edit with no color correction. This master needs to be delivered in the same flavor that your GAM was.

ProRes XQ

ProRes 4444 XQ is the highest quality ProRes. It can hold 12 bits per image channel and was built with HDR in mind.

Dolby XML

This XML file contains all of the analysis and trim decisions. For QC purposes it needs to be able to pass a check from Dolby’s own QC tool Metafier.

IMF

Interoperable Master Format files can do a lot. For the scope of this article we are only going to touch on the HDR delivery side. The IMF is created from an MXF made from JPEG 2000s. The jp2k files typically come from the PQ TIFF master. It is at this point that the XML file is married with picture to create one nice package for distribution.

Near Future

Currently we master for theatrical first for features. In the near future I see the “flippening” occurring. I would much rather spend the bulk of the grading time on the highest quality master rather than the 48-nit limited-range theatrical pass. I feel like you get a better SDR version by starting with the HDR since you have already corrected any contamination that might have been in the extreme shadows or highlights. Then you spend a few days “trimming” the theatrical SDR for the theaters. The DCP standard is in desperate need of a refresh. 250 Mbps is not enough for HDR or high resolution masters. For the first time in film history you can get a better picture in your living room than in most cinemas. This of course is changing and changing fast.
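To give the 250 Mbps figure some scale, here is a back-of-the-envelope sketch of how hard the picture has to be squeezed to fit under that cap. It assumes 12 bits per channel, three channels, 24 frames per second and a full 2K or 4K container. Real DCPs vary in active picture area and frame rate, so treat the numbers as rough. The function names are made up for illustration.

```python
def uncompressed_mbps(width, height, fps=24, bits_per_channel=12, channels=3):
    """Uncompressed picture data rate in megabits per second."""
    bits_per_frame = width * height * channels * bits_per_channel
    return bits_per_frame * fps / 1_000_000


def compression_ratio(width, height, cap_mbps=250, **kwargs):
    """How many times the picture must be compressed to fit under cap_mbps."""
    return uncompressed_mbps(width, height, **kwargs) / cap_mbps


if __name__ == "__main__":
    print("2K:", round(compression_ratio(2048, 1080), 1), ": 1")
    print("4K:", round(compression_ratio(4096, 2160), 1), ": 1")
```

The same cap that asks for single-digit compression at 2K asks for roughly four times as much at 4K, before any extra headroom for HDR highlights or higher frame rates.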

Sony and Samsung both have HDR cinema solutions that are poised to revolutionize the way we watch movies. Samsung has their 34-foot Onyx system, which is capable of 400 nit theatrical exhibition. You can see a proof of concept model in action today if you live in the LA area. Check it out at the Pacific Theatres Winnetka in Chatsworth.

Sony has, in my opinion, the winning solution at the moment. They have their CLED wall, which is capable of delivering 800 nits in a theatrical setting. These types of displays open up possibilities for filmmakers to use a whole new type of cinematic language without sacrificing any of the legacy storytelling devices we have used in the past.

For example, this would be the first time in the history of film where you could effect a physiological change in the viewer. I have often thought about a shot I graded for “The Boxtrolls” where the main character, Eggs, comes out from a whole life spent in the sewers. I cheated an effect where the viewer’s eyes were adjusting to an overly bright world. To achieve this I cranked the brightness and blurred the image slightly. I faded this adjustment off over many shots until your eye “adjusted” back to normal. The theatrical grade was done at 48 nits. At this light level, even at its brightest, the human eye is not irised down at all. But what if I had more range at my disposal? Today I would crank that shot until it made the audience’s irises close down. Then over the next few shots the audience would adjust to the “new” brighter scene and it would appear normal. That initial shock would be similar to the real-world shock of coming from a dark environment to a bright one.

Another such example that I would like to revisit is the myth of “L’Arrivée d’un train en gare de La Ciotat.” In this early Lumière picture a train pulls into a station. The urban legend is that this film had audiences jumping out of their seats and ducking for cover as the train comes hurtling towards them. Imagine if we set up the same shot today but in a dark tunnel. We could make the headlight so bright in HDR that, coupled with the sound of a rushing train, it would cause most viewers, at the very least, to look away as it rushes past. A 1000 nit peak after your eyes have acclimated to the dark can appear shockingly bright.

I’m excited for these and other examples yet to be created by filmmakers exploring this new medium. Here’s to better pixels and constantly progressing the art and science of moving images!

Please leave a comment below if there are points you disagree with or have any differing views on the topics discussed here.

Thanks for reading,